- Meeting Abstracts

- Open access

28th Annual Computational Neuroscience Meeting: CNS*2019

BMC Neuroscience volume 20, Article number: 56 (2019)

K1 Brain networks, adolescence and schizophrenia

Ed Bullmore

University of Cambridge, Department of Psychiatry, Cambridge, United Kingdom

Correspondence: Ed Bullmore (etb23@cam.ac.uk)

BMC Neuroscience 2019, 20(Suppl 1):K1

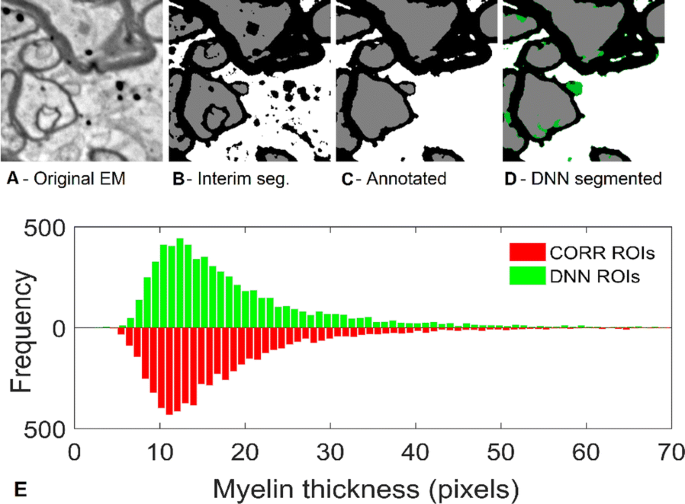

The adolescent transition from childhood to young adulthood is an important phase of human brain development and a period of increased risk for incidence of psychotic disorders. I will review some of the recent neuroimaging discoveries concerning adolescent development, focusing on an accelerated longitudinal study of ~ 300 healthy young people (aged 14–25 years) each scanned twice using MRI. Structural MRI, including putative markers of myelination, indicates changes in local anatomy and connectivity of association cortical network hubs during adolescence. Functional MRI indicates strengthening of initially weak connectivity of subcortical nuclei and association cortex. I will also discuss the relationships between intra-cortical myelination, brain networks and anatomical patterns of expression of risk genes for schizophrenia.

K2 Neural circuits for mental simulation

Kenji Doya

Okinawa Institute of Science and Technology, Neural Computation Unit, Okinawa, Japan

Correspondence: Kenji Doya (doya@oist.jp)

BMC Neuroscience 2019, 20(Suppl 1):K2

The basic process of decision making is often explained as learning the values of possible actions through reinforcement learning. In our daily life, however, we rarely rely on pure trial and error; instead, we use prior knowledge about the world to imagine what will happen before taking an action. How such “mental simulation” is implemented by neural circuits and how it is regulated to avoid delusion are exciting new topics in neuroscience. Here I report our work with functional MRI in humans and two-photon imaging in mice to clarify how action-dependent state transition models are learned and utilized in the brain.

K3 One network, many states: varying the excitability of the cerebral cortex

Maria V. Sanchez-Vives

IDIBAPS and ICREA, Systems Neuroscience, Barcelona, Spain

Correspondence: Maria V. Sanchez-Vives (msanche3@clinic.cat)

BMC Neuroscience 2019, 20(Suppl 1):K3

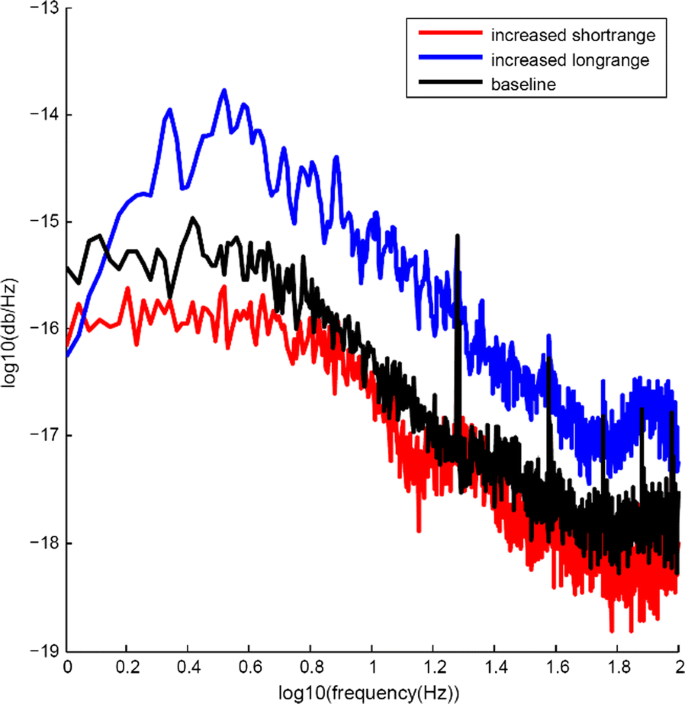

In the transition from deep sleep, anesthesia or coma states to wakefulness, there are profound changes in cortical interactions both in the temporal and the spatial domains. In a state of low excitability, the cortical network, both in vivo and in vitro, expresses its “default activity pattern”, slow oscillations [1], a state of low complexity and high synchronization. Understanding the multiscale mechanisms that enable the emergence of complex brain dynamics associated with wakefulness and cognition while departing from low-complexity, highly synchronized states such as sleep is key to the development of reliable monitors of brain state transitions and consciousness levels during physiological and pathological states. In this presentation I will discuss different experimental and computational approaches aimed at unraveling how the complexity of activity patterns emerges in the cortical network as it transitions across different brain states. Strategies such as varying anesthesia levels or sleep/awake transitions in vivo, or progressive variations in excitability by variable ionic levels, GABAergic antagonists, potassium blockers or electric fields in vitro, reveal some of the common features of these different cortical states, the gradual or abrupt transitions between them, and the emergence of dynamical richness, providing hints as to the underlying mechanisms.

Reference

1. Sanchez-Vives MV, Massimini M, Mattia M. Shaping the default activity pattern of the cortical network. Neuron 94.5 (2017): 993–1001.

K4 Neural circuits for flexible memory and navigation

Ila Fiete

Massachusetts Institute of Technology, McGovern Institute, Cambridge, United States of America

Correspondence: Ila Fiete (fiete@mit.edu)

BMC Neuroscience 2019, 20(Suppl 1):K4

I will discuss the problems of memory and navigation from a computational and functional perspective: What is difficult about these problems, which features of the neural circuit architecture and dynamics enable their solutions, and how the neural solutions are uniquely robust, flexible, and efficient.

F1 The geometry of abstraction in hippocampus and pre-frontal cortex

Silvia Bernardi1, Marcus K. Benna2, Mattia Rigotti3, Jérôme Munuera4, Stefano Fusi1, C. Daniel Salzman1

1Columbia University, Zuckerman Mind Brain Behavior Institute, New York, United States of America; 2Columbia University, Center for Theoretical Neuroscience, Zuckerman Mind Brain Behavior Institute, New York, NY, United States of America; 3IBM Research AI, Yorktown Heights, United States of America; 4Columbia University, Centre National de la Recherche Scientifique (CNRS), École Normale Supérieure, Paris, France

Correspondence: Marcus K. Benna (mkb2162@columbia.edu)

BMC Neuroscience 2019, 20(Suppl 1):F1

Abstraction can be defined as a cognitive process that finds a common feature—an abstract variable, or concept—shared by a number of examples. Knowledge of an abstract variable enables generalization to new examples based upon old ones. Neuronal ensembles could represent abstract variables by discarding all information about specific examples, but this allows for representation of only one variable. Here we show how to construct neural representations that encode multiple abstract variables simultaneously, and we characterize their geometry. Representations conforming to this geometry were observed in dorsolateral pre-frontal cortex, anterior cingulate cortex, and the hippocampus in monkeys performing a serial reversal-learning task. These neural representations allow for generalization, a signature of abstraction, and similar representations are observed in a simulated multi-layer neural network trained with back-propagation. These findings provide a novel framework for characterizing how different brain areas represent abstract variables, which is critical for flexible conceptual generalization and deductive reasoning.

F2 Signatures of network structure in timescales of spontaneous activity

Roxana Zeraati1, Nicholas Steinmetz2, Tirin Moore3, Tatiana Engel4, Anna Levina5

1University of Tübingen, International Max Planck Research School for Cognitive and System Neuroscience, Tübingen, Germany; 2University of Washington, Department of Biological Structure, Seattle, United States of America; 3Stanford University, Department of Neurobiology, Stanford, California, United States of America; 4Cold Spring Harbor Laboratory, Cold Spring Harbor, NY, United States of America; 5University of Tübingen, Tübingen, Germany

Correspondence: Roxana Zeraati (roxana.zeraati@tuebingen.mpg.de)

BMC Neuroscience 2019, 20(Suppl 1):F2

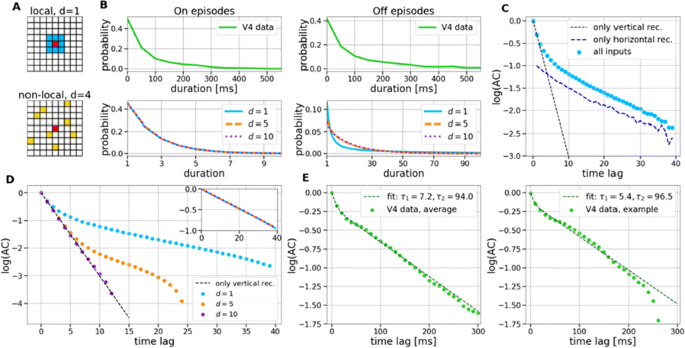

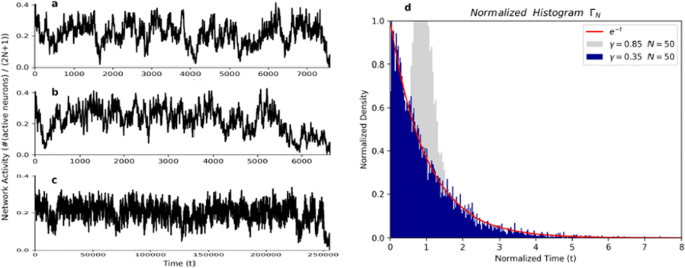

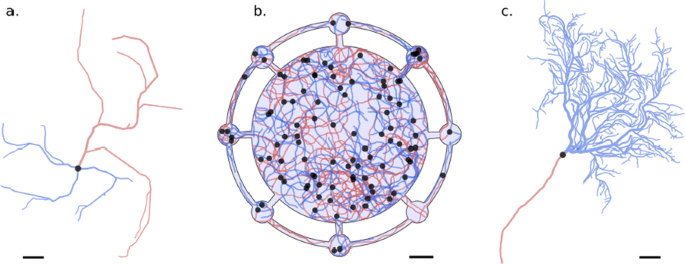

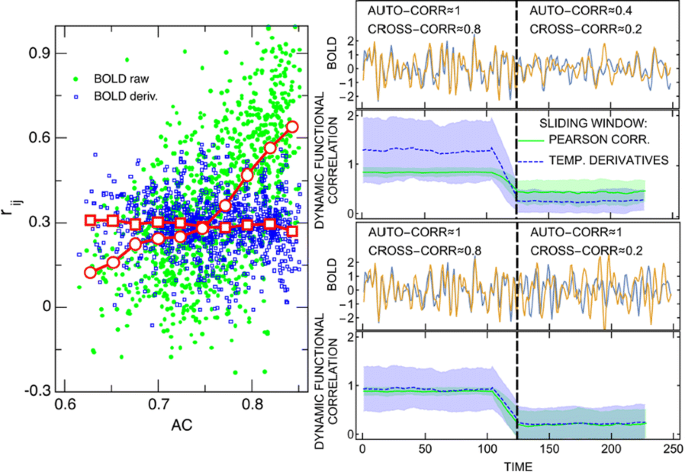

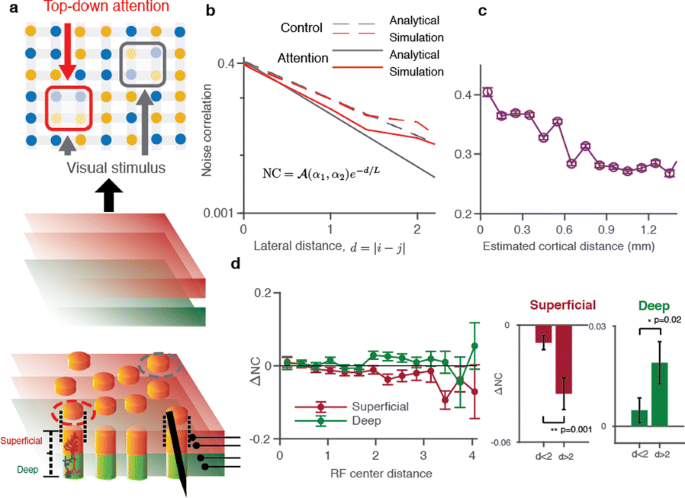

Cortical networks are spontaneously active. Timescales of these intrinsic fluctuations were suggested to reflect the network’s specialization for task-relevant computations. However, how these timescales arise from the spatial network structure is unknown. Spontaneous cortical activity unfolds across different spatial scales. On a local scale of individual columns, ongoing activity spontaneously transitions between episodes of vigorous (On) and faint (Off) spiking, synchronously across cortical layers. On a wider spatial scale, activity propagates as cascades of elevated firing across many columns, characterized by the branching ratio, defined as the average number of units activated by each active unit. We asked to what extent the timescales of spontaneous activity reflect the dynamics on these two spatial scales and the underlying network structure. To this end, we developed a branching network model capable of capturing both the local On-Off dynamics and the global activity propagation. Each unit in the model represents a cortical column, which has spatially structured connections to other columns (Fig. 1A). The columns stochastically transition between On and Off states. Transitions to the On state are driven by stochastic external inputs and by excitatory inputs from the neighboring columns (horizontal recurrent input). An On state can persist due to a self-excitation representing strong recurrent connections within one column (vertical recurrent input). On and Off episode durations in our model follow exponential distributions, similar to the On-Off dynamics observed in single cortical columns (Fig. 1B). We fixed the statistics of On-Off transitions and the global propagation, and studied the dependence of intrinsic timescales on the network spatial structure.

Fig. 1 a Schematic representation of the model's local and non-local connectivity. b Distributions of On-Off episode durations in V4 data and model. c Representation of different timescales in the autocorrelation (AC) of single columns. d Average AC of individual columns and the population activity (inset, with the same axes) for different network structures. e V4 data AC averaged over all recordings, and an example recording

We found that the timescales of local dynamics reflect the spatial network structure. In the model, activity of single columns exhibits two distinct timescales: one induced by the recurrent excitation within the column and another induced by interactions between the columns (Fig. 1C). The first timescale dominates dynamics in networks with more dispersed connectivity (Fig. 1A, non-local; Fig. 1D), whereas the second timescale is prominent in networks with more local connectivity (Fig. 1A, local; Fig. 1D). Since neighboring columns share many of their recurrent inputs, the second timescale is also evident in cross-correlations (CC) between columns, and it becomes longer with increasing distance between columns.
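The model's ingredients (stochastic external input, self-excitation within a column, and excitation from neighboring columns) can be illustrated with a minimal sketch. It is not the authors' implementation: the ring topology, the linear summation of activation probabilities, and all function names and parameter values below are assumptions chosen for illustration. The sum `p_self + p_horiz_total` plays the role of the branching ratio and stays fixed while the connectivity radius changes.

```python
import numpy as np

def simulate_columns(n=100, steps=5000, radius=1, p_self=0.6,
                     p_horiz_total=0.3, p_ext=0.01, seed=0):
    """Binary On/Off columns on a ring (hypothetical toy topology).

    Each column switches On with probability
    p_ext + p_self * (own state) + p_h * (number of On neighbors),
    where p_h spreads p_horiz_total evenly over 2 * radius neighbors.
    """
    rng = np.random.default_rng(seed)
    offsets = [d for d in range(-radius, radius + 1) if d != 0]
    p_h = p_horiz_total / len(offsets)
    state = rng.random(n) < 0.1
    activity = np.empty((steps, n), dtype=bool)
    for t in range(steps):
        # Number of active neighbors within the connectivity radius.
        neighbors_on = sum(np.roll(state, d) for d in offsets)
        p_on = p_ext + p_self * state + p_h * neighbors_on
        state = rng.random(n) < np.clip(p_on, 0.0, 1.0)
        activity[t] = state
    return activity

def mean_autocorr(activity, max_lag=50):
    """Normalized autocorrelation of single columns, averaged over columns."""
    x = activity.astype(float)
    x = x - x.mean(axis=0)
    var = (x * x).mean(axis=0)
    keep = var > 0                      # skip columns that never changed state
    x, var = x[:, keep], var[keep]
    ac = [((x[:len(x) - k] * x[k:]).mean(axis=0) / var).mean()
          for k in range(max_lag + 1)]
    return np.array(ac)
```

Comparing `mean_autocorr(simulate_columns(radius=1))` with `mean_autocorr(simulate_columns(radius=10))` gives a quick way to inspect how the decay of the single-column autocorrelation depends on how local the connectivity is.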

To test the model predictions, we analyzed 16-channel microelectrode array recordings of spiking activity from single columns in the primate area V4. During spontaneous activity, we observed two distinct timescales in columnar On-Off fluctuations (Fig. 1E). Two timescales were also present in CCs of neural activity on different channels within the same column. To examine how timescales depend on horizontal cortical distance, we leveraged the fact that columnar recordings generally exhibit slight horizontal shifts due to variability in the penetration angle. As a surrogate for horizontal displacements between pairs of channels, we used distances between centers of their receptive fields (RF). As predicted by the model, the second timescale in CCs became longer with increasing RF-center distance. Our results suggest that timescales of local On-Off fluctuations in single cortical columns provide information about the underlying spatial network structure of the cortex.

F3 Internal bias controls phasic but not delay-period dopamine activity in a parametric working memory task

Néstor Parga1, Stefania Sarno1, Manuel Beiran2, José Vergara3, Román Rossi-Pool3, Ranulfo Romo3

1Universidad Autónoma Madrid, Madrid, Spain; 2Ecole Normale Supérieure, Department of Cognitive Studies, Paris, France; 3Universidad Nacional Autónoma México, Instituto de Fisiología Celular, México DF, Mexico

Correspondence: Néstor Parga (nestor.parga@uam.es)

BMC Neuroscience 2019, 20(Suppl 1):F3

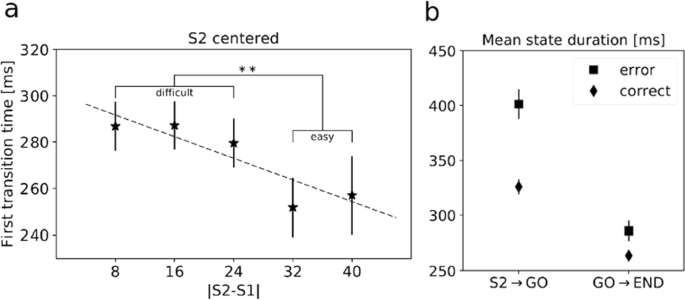

Dopamine (DA) has been implicated in coding reward prediction errors (RPEs) and in several other phenomena such as working memory and motivation to work for reward. Under uncertain stimulation conditions, DA phasic responses to relevant task cues reflect cortical perceptual decision-making processes, such as the certainty about stimulus detection and evidence accumulation, in a way compatible with the RPE hypothesis [1, 2]. This suggests that the midbrain DA system receives information from cortical circuits about decision formation and transforms it into an RPE signal. However, it is not clear how DA neurons behave when making a decision involves more demanding cognitive features, such as working memory and internal biases, or how they reflect motivation under uncertain conditions. To advance knowledge on these issues, we recorded and analyzed the firing activity of putative midbrain DA neurons while monkeys discriminated the frequencies of two vibrotactile stimuli delivered to one fingertip. This two-interval forced-choice task, in which both stimuli were selected randomly in each trial, has been widely used to investigate perception, working memory and decision-making in sensory and frontal areas [3]; the current study adds to this scenario possible roles of midbrain DA neurons.

We found that the DA responses to the stimuli were not monotonically tuned to their frequency values. Instead, they were controlled by an internally generated bias (contraction bias). This bias induced a subjective difficulty that modulated those responses as well as the accuracy and the response times (RTs). A Bayesian model for the choice explained the bias and gave a measure of the animal’s decision confidence, which also appeared to be modulated by the bias. We also found that the DA activity was above baseline throughout the delay (working memory) period. Interestingly, this activity was neither tuned to the first frequency nor controlled by the internal bias. While the phasic responses to the task events could be described by a reinforcement learning model based on belief states, the ramping behavior exhibited during the delay period could not be explained by standard models. Finally, the DA responses to the stimuli in short-RT and long-RT trials were significantly different; interpreting the RTs as a measure of motivation, our analysis indicated that motivation strongly affected the responses to the task events but had only a weak influence on the DA activity during the delay interval. To summarize, our results show for the first time that an internal phenomenon (the bias) can control the DA phasic activity in a way similar to physical differences in external stimuli. We also observed a ramping DA activity during the working memory period, independent of the memorized frequency value. Overall, our study supports the notion that delay and phasic DA activities accomplish quite different functions.
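One common way to formalize a contraction bias is Bayesian shrinkage of the remembered first frequency toward the mean of the stimulus distribution. The sketch below assumes a Gaussian prior and Gaussian memory noise. These choices, the parameter values, and the function names are illustrative assumptions, not the model fitted in the study.

```python
import math

def shrinkage_weight(prior_sd, noise_sd):
    """Weight given to the noisy memory of f1 (the rest goes to the prior mean)."""
    return prior_sd ** 2 / (prior_sd ** 2 + noise_sd ** 2)

def p_choose_f1_higher(f1, f2, prior_mean=20.0, prior_sd=6.0,
                       noise_f1=4.0, noise_f2=1.0):
    """Probability of reporting f1 > f2 under the assumed Gaussian model.

    The remembered f1 is replaced by its posterior mean, which pulls it
    toward prior_mean; f2 is assumed to be used as measured.
    """
    w = shrinkage_weight(prior_sd, noise_f1)
    mean_diff = w * f1 + (1.0 - w) * prior_mean - f2
    sd_diff = math.sqrt((w * noise_f1) ** 2 + noise_f2 ** 2)
    return 0.5 * (1.0 + math.erf(mean_diff / (sd_diff * math.sqrt(2.0))))

# Two trial types with the same physical difference |f1 - f2| = 4 Hz.
# With f1 below the prior mean, the bias helps when f1 > f2 and hurts when f1 < f2.
accuracy_bias_helps = p_choose_f1_higher(14.0, 10.0)
accuracy_bias_hurts = 1.0 - p_choose_f1_higher(14.0, 18.0)
```

Under these assumptions, pairs with identical physical differences differ in subjective difficulty, which is the kind of effect the abstract attributes to the internal bias.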

References

1. Sarno S, de Lafuente V, Romo R, Parga N. Dopamine reward prediction error signal codes the temporal evaluation of a perceptual decision report. PNAS. 201712479 (2017)

2. Lak A, Nomoto K, Keramati M, Sakagami M, Kepecs A. Midbrain dopamine neurons signal belief in choice accuracy during a perceptual decision. Curr Biol 27, 821–832 (2017)

3. Romo R, Brody CD, Hernández A, Lemus L. Neuronal correlates of parametric working memory in the prefrontal cortex. Nature 399, 470–473 (1999)

O1 Representations of dissociated shape and category in deep Convolutional Neural Networks and human visual cortex

Astrid Zeman, J Brendan Ritchie, Stefania Bracci, Hans Op de Beeck

KULeuven, Brain and Cognition, Leuven, Belgium

Correspondence: Astrid Zeman (astrid.zeman@kuleuven.be)

BMC Neuroscience 2019, 20(Suppl 1):O1

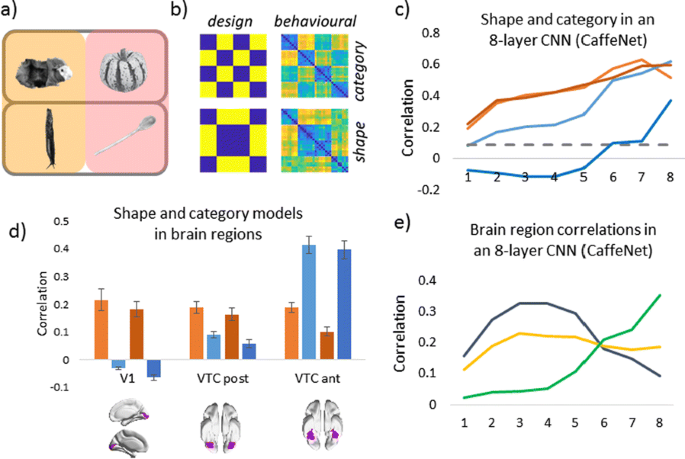

Deep Convolutional Neural Networks (CNNs) excel at object recognition and classification, with accuracy levels that now exceed those of humans [1]. In addition, CNNs represent clusters of object similarity, such as the animate-inanimate division that is evident in object-selective areas of human visual cortex [2]. CNNs are trained using natural images, which contain shape and category information that is often highly correlated [3]. Because of this potential confound, CNNs may rely upon shape information, rather than category, to classify objects. We investigate this possibility by quantifying the representational correlations of shape and category along the layers of multiple CNNs, with human behavioural ratings of these two factors, using two datasets that explicitly orthogonalize shape from category [3, 4] (Fig. 1a, b, c). We analyse shape and category representations along the human ventral pathway areas using fMRI (Fig. 1d) and measure correlations between artificial and biological representations by comparing the output from CNN layers with fMRI activation in ventral areas (Fig. 1e).
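The idea of correlating a layer's representation with shape and category models can be sketched with a toy representational similarity analysis. Everything here is an assumption made for illustration: the dissimilarity measure (1 minus Pearson correlation), the use of Pearson correlation to compare matrices, the synthetic "layers", and all function names. The abstract does not specify the exact metrics used.

```python
import numpy as np

def rdm(patterns):
    """Representational dissimilarity matrix: 1 - Pearson r between stimuli (rows)."""
    return 1.0 - np.corrcoef(patterns)

def model_rdm(labels):
    """Binary model RDM: 0 if two stimuli share a label, 1 otherwise."""
    labels = np.asarray(labels)
    return (labels[:, None] != labels[None, :]).astype(float)

def rdm_correlation(a, b):
    """Pearson correlation between the upper triangles of two RDMs."""
    iu = np.triu_indices_from(a, k=1)
    return np.corrcoef(a[iu], b[iu])[0, 1]

def toy_layer(labels, rng, n_units=200, noise=0.5):
    """Synthetic activations driven by one stimulus factor plus noise."""
    prototypes = rng.normal(size=(2, n_units))
    return prototypes[labels] + noise * rng.normal(size=(len(labels), n_units))

# Orthogonal design: every category contains every shape equally often.
category = np.array([0, 0, 0, 0, 1, 1, 1, 1])
shape = np.array([0, 0, 1, 1, 0, 0, 1, 1])

rng = np.random.default_rng(0)
early = toy_layer(shape, rng)      # stand-in for a shape-driven layer
late = toy_layer(category, rng)    # stand-in for a category-driven layer

early_shape = rdm_correlation(rdm(early), model_rdm(shape))
early_category = rdm_correlation(rdm(early), model_rdm(category))
late_shape = rdm_correlation(rdm(late), model_rdm(shape))
late_category = rdm_correlation(rdm(late), model_rdm(category))
```

Because the design crosses shape with category, the two model matrices are nearly uncorrelated, so a layer can score high on one factor without scoring high on the other.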

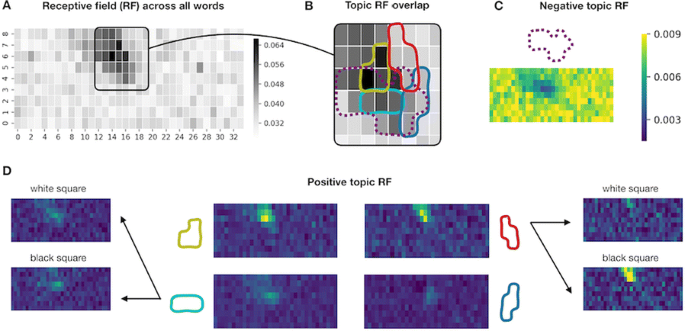

Fig. 1 Shape and category models in CNNs vs the brain. a Example stimuli. b Design and behavioral models. c Shape (orange) and category (blue) correlations in CNNs. Behavioral (darker) and design (lighter) models. Only one CNN shown. d Shape (orange) and category (blue) correlations in ventral brain regions. e V1 (blue), posterior (yellow) and anterior (green) VTC correlated with CNN layers

First, we find that CNNs encode object category independently from shape, with category information peaking at the final fully connected layer for all network architectures. At the initial layer of all CNNs, shape is represented significantly above chance in the majority of cases (94%), whereas category is not. Category information only increases above the significance level in the final few layers of all networks, reaching a maximum at the final layer after remaining low for the majority of layers. Second, by using fMRI to analyse shape and category representations along the ventral pathway, we find that shape information decreases from early visual cortex (V1) to the anterior portion of ventral temporal cortex (VTC). Conversely, category information increases from V1 to anterior VTC. This two-way interaction is significant for both datasets, demonstrating that this effect is evident for both low-level (orientation dependent) and high-level (low vs high aspect ratio) definitions of shape. Third, comparing CNNs with brain areas, the highest correlation with anterior VTC occurs at the final layer of all networks. V1 correlations reach a maximum prior to fully connected layers, at early, mid or late layers, depending upon network depth. In all CNNs, the order of maximum correlations with neural data corresponds well with the flow of visual information along the visual pathway. Overall, our results suggest that CNNs represent category information independently from shape, similarly to human object recognition processing.

References

1. He K, Zhang X, Ren S, Sun J. Delving Deep into Rectifiers: Surpassing Human-Level Performance on ImageNet Classification. 2015 IEEE International Conference on Computer Vision (ICCV), Santiago 2015, pp 1026–1034.

2. Khaligh-Razavi S-M, Kriegeskorte N. Deep Supervised, but Not Unsupervised, Models May Explain IT Cortical Representation. PLoS Computational Biology 2014, 10(11), e1003915.

3. Bracci S, Op de Beeck H. Dissociations and Associations between Shape and Category. J Neurosci 2016, 36(2), 432–444.

4. Ritchie JB, Op de Beeck H. Using neural distance to predict reaction time for categorizing the animacy, shape, and abstract properties of objects. BioRxiv 2018. Preprint at: https://doi.org/10.1101/496539

O2 Discovering the building blocks of hearing: a data-driven, neuro-inspired approach

Lotte Weerts1, Claudia Clopath2, Dan Goodman1

1Imperial College London, Electrical and Electronic Engineering, London, United Kingdom; 2Imperial College London, Department of Bioengineering, London, United Kingdom

Correspondence: Dan Goodman (d.goodman@imperial.ac.uk)

BMC Neuroscience 2019, 20(Suppl 1):O2

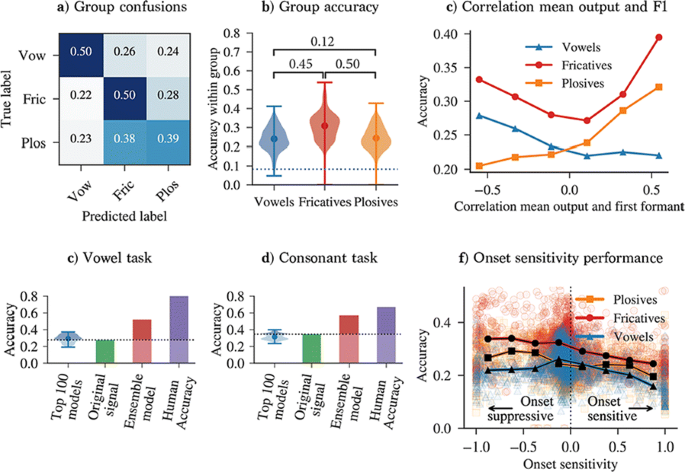

Our understanding of hearing and speech recognition rests on controlled experiments requiring simple stimuli. However, these stimuli often lack the variability and complexity characteristic of complex sounds such as speech. We propose an approach that combines neural modelling with data-driven machine learning to determine auditory features that are both theoretically powerful and can be extracted by networks that are compatible with known auditory physiology. Our approach bridges the gap between detailed neuronal models that capture specific auditory responses, and research on the statistics of real-world speech data and its relationship to speech recognition. Importantly, our model can capture a wide variety of well studied features using specific parameter choices, and allows us to unify several concepts from different areas of hearing research.

We introduce a feature detection model with a modest number of parameters that is compatible with auditory physiology. We show that this model is capable of detecting a range of features such as amplitude modulations (AMs) and onsets. In order to objectively determine relevant feature detectors within our model parameter space, we use a simple classifier that approximates the information bottleneck, a principle grounded in information theory that can be used to define which features are “useful”. By analysing the performance in a classification task, our framework allows us to determine the best model parameters and their neurophysiological implications and relate those to psychoacoustic findings.

We analyse the performance of a range of model variants in a phoneme classification task (Fig. 1). Some variants improve accuracy compared to using the original signal, indicating that our feature detection model extracts useful information. By analysing the properties of high-performing variants, we rediscover several proposed mechanisms for robust speech processing. Firstly, our results suggest that model variants that can detect and distinguish between formants are important for phoneme recognition. Secondly, we rediscover the importance of AM sensitivity for consonant recognition, which is in line with several experimental studies showing that consonant recognition is degraded when certain amplitude modulations are removed. Besides confirming well-known mechanisms, our analysis hints at other less-established ideas, such as the importance of onset suppression. Our results indicate that onset suppression can improve phoneme recognition, which is in line with the hypothesis that the suppression of onset noise (or “spectral splatter”), as observed in the mammalian auditory brainstem, can improve the clarity of a neural harmonic representation. We also discover model variants that are responsive to more complex features, such as combined onset and AM sensitivity. Finally, we show how our approach can be extended to more complex environments by distorting the clean speech signal with noise.

Fig. 1 a Between-group confusion matrix for best parameters. b Distribution of within-group accuracies and between-group accuracy correlations. c Within-group accuracy and correlation of model output and spectral peaks. d, e Accuracy achieved with model variants, the original filtered signal, and ensemble models on a vowel (d) and consonant (e) task. f Within-group accuracy versus onset strength

Our approach has various potential applications. Firstly, it could lead to new, testable experimental hypotheses for understanding hearing. Moreover, promising features could be directly applied as a new acoustic front-end for speech recognition systems.

Acknowledgments: This work was partly supported by a Titan Xp donated by the NVIDIA Corporation, The Royal Society grant RG170298 and the Engineering and Physical Sciences Research Council (grant number EP/L016737/1).

O3 Modeling stroke and rehabilitation in mice using large-scale brain networks

Spase Petkoski1, Anna Letizia Allegra Mascaro2, Francesco Saverio Pavone2, Viktor Jirsa1

1Aix-Marseille Université, Institut de Neurosciences des Systèmes, Marseille, France; 2University of Florence, European Laboratory for Non-linear Spectroscopy, Florence, Italy

Correspondence: Spase Petkoski (spase.petkoski@univ-amu.fr)

BMC Neuroscience 2019, 20(Suppl 1):O3

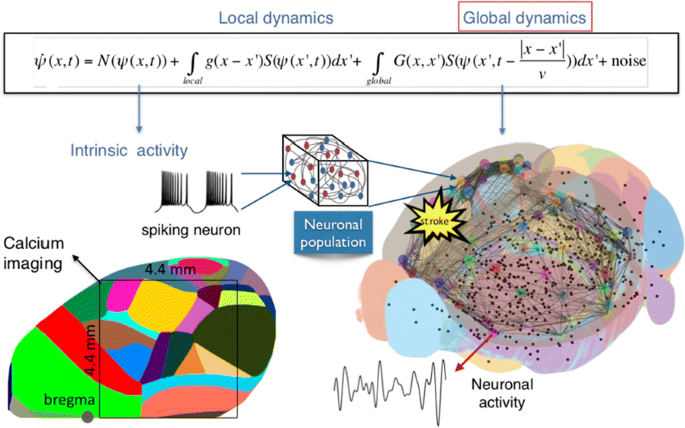

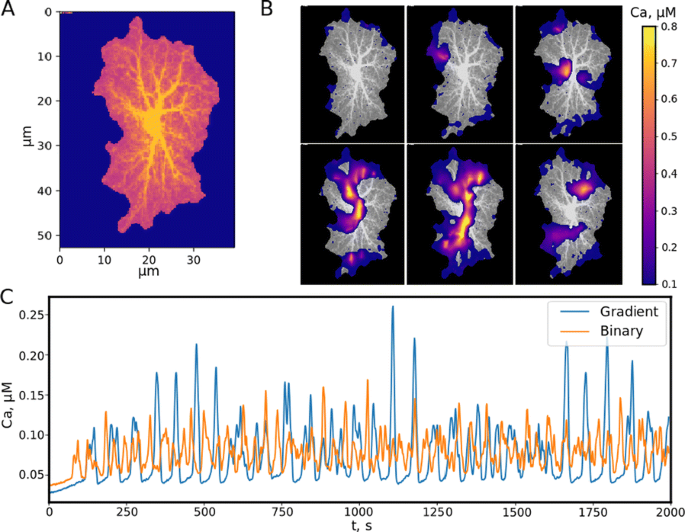

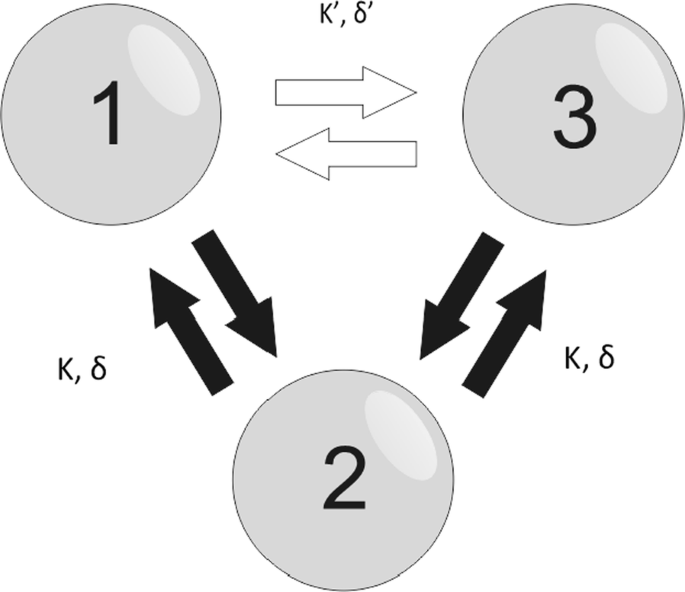

Individualized large-scale computational modeling of the dynamics associated with brain pathologies [1] is an emerging approach in clinical applications, and it is validated through animal models. A good candidate for confirming brain network causality is stroke and the subsequent recovery, which alter the brain’s structural connectivity; these alterations are then reflected at the functional and behavioral levels. In this study we use a large-scale brain network model (BNM) to computationally validate the structural changes due to stroke and recovery in mice, and their impact on resting-state functional connectivity (FC), as captured by wide-field calcium imaging.

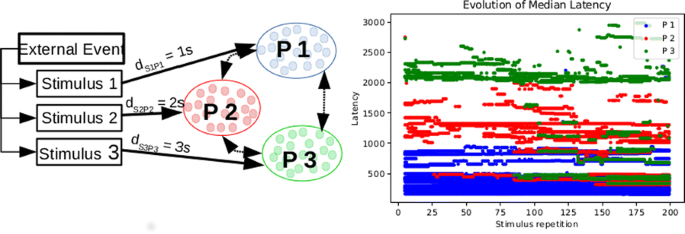

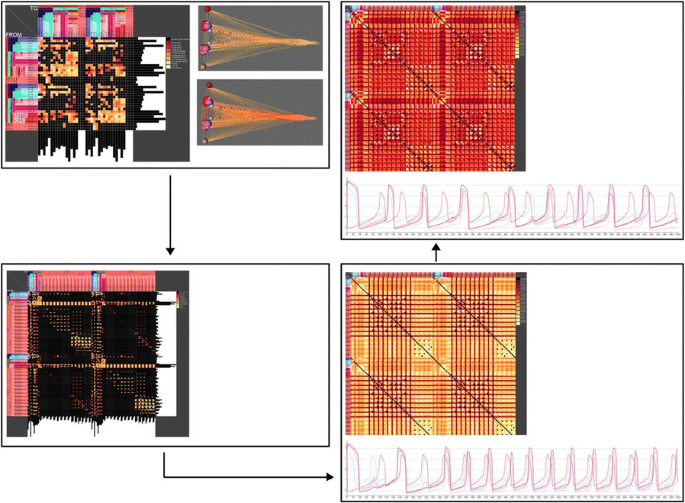

We built our BNM on the detailed Allen Mouse (AM) connectome as implemented in The Virtual Mouse Brain [2]. The connectome dictates the strength of the couplings between distant brain regions based on tracer data. The homogeneous local connectivity is absorbed into the neuronal mass model, which is generally derived from the mean activity of populations of spiking neurons (Fig. 1) and is represented here by Kuramoto oscillators [3], a canonical model for network synchronization due to weak interactions. The photothrombotic focal stroke affects the right primary motor cortex (rM1). The injured forelimb is trained daily on a custom-designed robotic device (M-Platform [4, 5]), starting 5 days after the stroke, for a total of 4 weeks. The stroke is modeled by different levels of damage to the links connecting rM1, while the recovery is represented by reinforcement of alternative connections of the nodes initially linked to it [6]. We systematically simulate various impacts of stroke and recovery to find the best match with the coactivation patterns in the data, where the FC is characterized by the phase coherence calculated from the phases of Hilbert-transformed delta-frequency activity of pixels within separate regions [6]. Statistically significant changes in the FC of 5 animals are obtained for transitions between the three conditions (healthy, stroke, and rehabilitation after 4 weeks of training), and these are compared with the best model fits for each condition in the parameter space of global coupling strength, stroke impact, and rewiring.
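The modeling steps described above (Kuramoto oscillators coupled through a weight matrix, a lesion that weakens the links of one node, and FC measured as phase coherence) can be sketched as follows. This is a minimal illustration under stated assumptions: it omits time delays, uses plain Euler integration, and all function names, the toy connectivity, and parameter values are hypothetical rather than taken from the study. In the simulation the phases are state variables, so no Hilbert transform is needed to obtain them.

```python
import numpy as np

def simulate_kuramoto(weights, omega, coupling, dt=1e-3, steps=5000,
                      noise=0.0, seed=0):
    """Euler integration of d(theta_i)/dt = omega_i + G * sum_j w_ij sin(theta_j - theta_i)."""
    rng = np.random.default_rng(seed)
    n = len(omega)
    theta = rng.uniform(0.0, 2.0 * np.pi, n)
    phases = np.empty((steps, n))
    for t in range(steps):
        diff = theta[None, :] - theta[:, None]      # diff[i, j] = theta_j - theta_i
        drift = omega + coupling * (weights * np.sin(diff)).sum(axis=1)
        theta = theta + dt * drift + np.sqrt(dt) * noise * rng.normal(size=n)
        phases[t] = theta
    return phases

def phase_coherence(phases):
    """Pairwise phase coherence |<exp(i (theta_i - theta_j))>_t|."""
    z = np.exp(1j * phases)
    return np.abs(z.T @ z.conj()) / len(phases)

def lesion(weights, node, damage):
    """Scale all links of `node` by (1 - damage); damage = 1 disconnects it."""
    w = weights.copy()
    w[node, :] *= 1.0 - damage
    w[:, node] *= 1.0 - damage
    return w

# Toy example: 6 all-to-all coupled regions with delta-band natural frequencies (Hz -> rad/s).
n_regions = 6
toy_weights = np.ones((n_regions, n_regions)) - np.eye(n_regions)
toy_omega = 2.0 * np.pi * np.linspace(2.5, 3.5, n_regions)

healthy_fc = phase_coherence(
    simulate_kuramoto(toy_weights, toy_omega, coupling=5.0)[1000:])
stroke_fc = phase_coherence(
    simulate_kuramoto(lesion(toy_weights, 0, 1.0), toy_omega, coupling=5.0)[1000:])
```

Scanning `coupling` and `damage` and comparing the resulting coherence matrices with an empirical FC matrix would mirror the parameter-space fitting described in the text; the first 1000 steps are discarded here as a transient.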

Fig. 1 The equation of the mouse BNM shows that the spatiotemporal dynamics are shaped by the connectivity. The brain network (right) is reconstructed from the AMA, showing the centers of subcortical (small black dots) and cortical (colored circles) regions. On the left, the field of view during the recordings is overlaid on the reconstructed brain, and different colors represent the cortical regions

This approach uncovers recovery paths in the parameter space of the dynamical system that can be related to neurophysiological quantities such as the white matter tracts. This can lead to better strategies for rehabilitation, such as stimulation or inhibition of certain regions and links that have a critical role on the dynamics of the recovery.

References

1. Olmi S, Petkoski S, Guye M, Bartolomei F, Jirsa V. Controlling seizure propagation in large-scale brain networks. PLoS Comp Biol. [in press]

2. Melozzi F, Woodman MM, Jirsa VK, Bernard C. The Virtual Mouse Brain: A computational neuroinformatics platform to study whole mouse brain dynamics. eNeuro 0111-17. 2017.

3. Petkoski S, Palva JM, Jirsa VK. Phase-lags in large scale brain synchronization: Methodological considerations and in-silico analysis. PLoS Comp Biol, 14(7), 1–30. 2018.

4. Spalletti C, et al. A robotic system for quantitative assessment and poststroke training of forelimb retraction in mice. Neurorehabilitation and neural repair 28, 188–196. 2014.

5. Allegra Mascaro A, et al. Rehabilitation promotes the recovery of distinct functional and structural features of healthy neuronal networks after stroke. [under review].

6. Petkoski S, et al. Large-scale brain network model for stroke and rehabilitation in mice. [in prep].

O4 Self-consistent correlations of randomly coupled rotators in the asynchronous state

Alexander van Meegen1, Benjamin Lindner2

1Jülich Research Centre, Institute of Neuroscience and Medicine (INM-6) and Institute for Advanced Simulation (IAS-6), Jülich, Germany; 2Humboldt University Berlin, Physics Department, Berlin, Germany

Correspondence: Alexander van Meegen (a.van.meegen@fz-juelich.de)

BMC Neuroscience 2019, 20(Suppl 1):O4

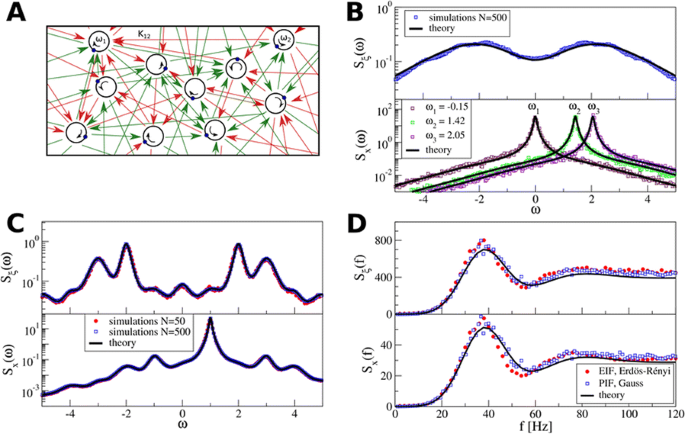

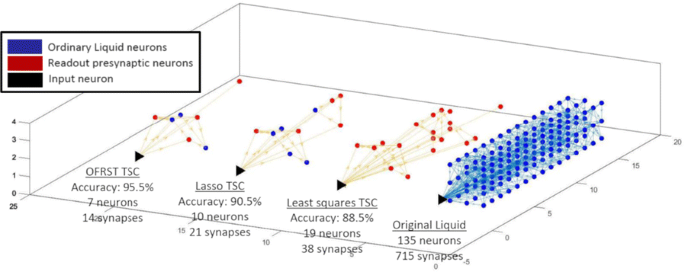

Spiking activity of cortical neurons in behaving animals is highly irregular and asynchronous. The quasi-stochastic activity (the network noise) does not seem to be rooted in the comparatively weak intrinsic noise sources but is most likely due to the nonlinear chaotic interactions in the network. Consequently, simple models of spiking neurons display similar states, the theoretical description of which has turned out to be notoriously difficult. In particular, calculating the neuron’s correlation function is still an open problem. One classical approach, pioneered in the seminal work of Sompolinsky et al. [1], used analytically tractable rate units to obtain a self-consistent theory of the network fluctuations and the correlation function of the single unit in the asynchronous irregular state. Recently, the original model attracted renewed interest, leading to substantial extensions and a wide range of novel results [2–5].

Here, we develop a theory for a heterogeneous random network of unidirectionally coupled phase oscillators [6]. Similar to Sompolinsky’s rate-unit model, the system can attain an asynchronous state with pronounced temporal autocorrelations of the units. The model can be examined analytically and even allows for closed-form solutions in simple cases. Furthermore, with a small extension, it can mimic mean-driven networks of spiking neurons and the theory can be extended to this case accordingly.

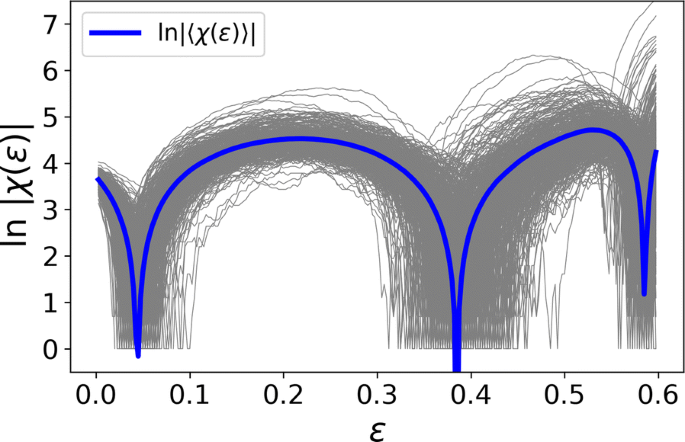

Specifically, we derived a differential equation for the self-consistent autocorrelation function of the network noise and of the single oscillators. Its numerical solution has been confirmed by simulations of sparsely connected networks (Fig. 1). Explicit expressions for correlation functions and power spectra for the case of a homogeneous network (identical oscillators) can be obtained in the limits of weak or strong coupling strength. To apply the model to networks of sparsely coupled excitatory and inhibitory exponential integrate-and-fire (IF) neurons, we extended the coupling function and derived a second differential equation for the self-consistent autocorrelations. Deep in the mean-driven regime of the spiking network, our theory is in excellent agreement with simulation results of the sparse network.
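The notion of self-consistency between the network noise and the single-unit statistics can be illustrated numerically. The sketch below is not the model of [6]: it assumes dense Gaussian couplings with standard deviation `coupling / sqrt(n)`, a sinusoidal dependence on the presynaptic phase, Gaussian-distributed natural frequencies, and Euler integration, all of which are illustrative choices, as are the function names. Under these assumptions and for a large asynchronous network, the autocovariance of the summed input to a unit is expected to be close to `coupling ** 2` times the autocovariance of a single unit's output.

```python
import numpy as np

def simulate_rotators(n=200, coupling=1.0, omega_mean=1.0, omega_std=0.3,
                      dt=0.02, steps=5000, seed=0):
    """Random network of phase oscillators (hypothetical toy version).

    d(theta_m)/dt = omega_m + xi_m,  xi_m = sum_n K_mn * sin(theta_n),
    with K_mn drawn independently from N(0, coupling^2 / n).
    Returns unit outputs x = sin(theta) and network noise xi, both (steps, n).
    """
    rng = np.random.default_rng(seed)
    K = rng.normal(0.0, coupling / np.sqrt(n), size=(n, n))
    np.fill_diagonal(K, 0.0)
    omega = rng.normal(omega_mean, omega_std, size=n)
    theta = rng.uniform(0.0, 2.0 * np.pi, n)
    x = np.empty((steps, n))
    xi = np.empty((steps, n))
    for t in range(steps):
        x[t] = np.sin(theta)
        xi[t] = K @ x[t]
        theta = theta + dt * (omega + xi[t])
    return x, xi

def mean_autocov(signal, max_lag):
    """Autocovariance at lags 0..max_lag, averaged over units and time."""
    s = signal - signal.mean(axis=0)
    return np.array([(s[:len(s) - k] * s[k:]).mean()
                     for k in range(max_lag + 1)])
```

Plotting `mean_autocov(xi, L)` against `coupling ** 2 * mean_autocov(x, L)` for a simulated network is a simple consistency check of this kind; a theory such as the one described in the text replaces the simulation with an equation for the autocorrelation itself.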

Fig. 1 a Sketch of a random network of phase oscillators. b–d Self-consistent power spectra of network noise and single units (upper and lower plots, respectively) obtained from simulations (colored symbols) compared with the theory (black lines): heterogeneous (b) and homogeneous (c) networks of phase oscillators, and sparsely coupled IF networks (d). Panels b–d adapted and modified from [6]

This work paves the way for more detailed studies of how the statistics of connection strength, the heterogeneity of network parameters, and the form of the interaction function shape the network noise and the autocorrelations of the single element in the asynchronous irregular state.

References

1. Sompolinsky H, Crisanti A, Sommers HJ. Chaos in random neural networks. Physical review letters 1988 Jul 18;61(3):259.

2. Kadmon J, Sompolinsky H. Transition to chaos in random neuronal networks. Physical Review X 2015 Nov 19;5(4):041030.

3. Mastrogiuseppe F, Ostojic S. Linking connectivity, dynamics, and computations in low-rank recurrent neural networks. Neuron 2018 Aug 8;99(3):609-23.

4. Schuecker J, Goedeke S, Helias M. Optimal sequence memory in driven random networks. Physical Review X 2018 Nov 14;8(4):041029.

5. Muscinelli SP, Gerstner W, Schwalger T. Single neuron properties shape chaotic dynamics in random neural networks. arXiv preprint arXiv:1812.06925 2018 Dec 17.

6. van Meegen A, Lindner B. Self-Consistent Correlations of Randomly Coupled Rotators in the Asynchronous State. Physical review letters 2018 Dec 20;121(25):258302.

O5 Firing rate-dependent phase responses dynamically regulate Purkinje cell network oscillations

Yunliang Zang, Erik De Schutter

Okinawa Institute of Science and Technology, Computational Neuroscience Unit, Onna-Son, Japan

Correspondence: Yunliang Zang (zangyl1983@gmail.com)

BMC Neuroscience 2019, 20(Suppl 1):O5

Phase response curves (PRCs) have been defined to quantify how a weak stimulus shifts the next spike timing in regular firing neurons. However, the biophysical mechanisms that shape the PRC profiles are poorly understood. The PRCs in Purkinje cells (PCs) show firing rate (FR) adaptation. At low FRs, the responses are small and phase independent. At high FRs, the responses become phase dependent at later phases, with their onset phases gradually left-shifted and peaks gradually increased, due to an unknown mechanism [1, 2].

Using our recently developed compartment-based PC model [3], we reproduced the FR-dependence of PRCs and identified the depolarized interspike membrane potential as the mechanism underlying the transition from phase-independent responses at low FRs to the gradually left-shifted phase-dependent responses at high FRs. We also demonstrated this mechanism plays a general role in shaping PRC profiles in other neurons.

PC axon collaterals have been proposed to correlate temporal spiking in PC ensembles [4, 5], but whether and how they interact with the FR-dependent PRCs to regulate PC output remains unexplored. We built a recurrent inhibitory PC-to-PC network model to examine how FR-dependent PRCs regulate the synchrony of high-frequency (~ 160 Hz) oscillations observed in vivo [4]. We find that the synchrony of these oscillations increases with FR due to larger and broader PRCs at high FRs. This increased synchrony still holds when the network incorporates dynamically and heterogeneously changing cellular FRs. Our work implies that FR-dependent PRCs may be a critical property of the cerebellar cortex in combining rate- and synchrony-coding to dynamically organize its temporal output.

References

1. Phoka E., et al., A new approach for determining phase response curves reveals that Purkinje cells can act as perfect integrators. PLoS Comput. Biol 2010. 6(4): p. e1000768.
2. Couto J., et al., On the firing rate dependency of the phase response curve of rat Purkinje neurons in vitro. PLoS Comput. Biol 2015. 11(3): p. e1004112.
3. Zang Y, Dieudonne S, De Schutter E. Voltage- and Branch-Specific Climbing Fiber Responses in Purkinje Cells. Cell Rep 2018. 24(6): p. 1536-1549.
4. de Solages C., et al., High-frequency organization and synchrony of activity in the purkinje cell layer of the cerebellum. Neuron 2008. 58(5): p. 775-88.
5. Witter L., et al., Purkinje Cell Collaterals Enable Output Signals from the Cerebellar Cortex to Feed Back to Purkinje Cells and Interneurons. Neuron 2016. 91(2): p. 312-9.

O6 Computational modeling of brainstem-spinal circuits controlling locomotor speed and gait

Ilya Rybak, Jessica Ausborn, Simon Danner, Natalia Shevtsova

Drexel University College of Medicine, Department of Neurobiology and Anatomy, Philadelphia, PA, United States of America

Correspondence: Ilya Rybak (rybak@drexel.edu)

BMC Neuroscience 2019, 20(Suppl 1):O6

Locomotion is an essential motor activity allowing animals to survive in complex environments. Depending on the environmental context and current needs, quadruped animals can switch locomotor behavior from slow left-right alternating gaits, such as walk and trot (typical for exploration), to higher-speed synchronous gaits, such as gallop and bound (specific for escape behavior). At the spinal cord level, the locomotor gait is controlled by interactions between four central rhythm generators (RGs) located on the left and right sides of the lumbar and cervical enlargements of the cord, each producing rhythmic activity controlling one limb. The activities of the RGs are coordinated by commissural interneurons (CINs), projecting across the midline to the contralateral side of the cord, and long propriospinal neurons (LPNs), connecting the cervical and lumbar circuits. At the brainstem level, locomotor behavior and gaits are controlled by two major brainstem nuclei: the cuneiform (CnF) and the pedunculopontine (PPN) nuclei [1]. Glutamatergic neurons in both nuclei contribute to the control of slow alternating-gait movements, whereas only activation of CnF can elicit high-speed synchronous-gait locomotion. Neurons from both regions project to the spinal cord via descending reticulospinal tracts from the lateral paragigantocellular nuclei (LPGi) [2].

To investigate the brainstem control of spinal circuits involved in the slow exploratory and fast escape locomotion, we built a computational model of the brainstem-spinal circuits controlling these locomotor behaviors. The spinal cord circuits in the model included four RGs (one per limb) interacting via cervical and lumbar CINs and LPNs. The brainstem model incorporated bilaterally interacting CnF and PPN circuits projecting to the LPGi nuclei that mediated the descending pathways to the spinal cord. These pathways provided excitation of all RGs to control locomotor frequency and inhibited selected CINs and LPNs, which allowed the model to reproduce the speed-dependent gait transitions observed in intact mice and the loss of particular gaits in mutants lacking some genetically identified CINs [3]. The proposed structure of synaptic inputs of the descending (LPGi) pathways to the spinal CINs and LPNs allowed the model to reproduce the experimentally observed effects of stimulation of excitatory and inhibitory neurons within CnF, PPN, and LPGi. The model suggests explanations for (a) the speed-dependent expression of different locomotor gaits and the role of different CINs and LPNs in gait transitions, (b) the involvement of the CnF and PPN nuclei in the control of low-speed alternating-gait locomotion and the specific role of the CnF in the control of high-speed synchronous-gait locomotion, and (c) the role of inhibitory neurons in these areas in slowing down and stopping locomotion. The model provides important insights into the brainstem-spinal cord interactions and the brainstem control of locomotor speed and gaits.

References

1. Caggiano V, Leiras R, Goñi-Erro H, et al. Midbrain circuits that set locomotor speed and gait selection. Nature 2018, 553, 455–460.
2. Capelli P, Pivetta C, Esposito MS, Arber S. Locomotor speed control circuits in the caudal brainstem. Nature 2017, 551, 373–377.
3. Bellardita C, Kiehn O. Phenotypic characterization of speed-associated gait changes in mice reveals modular organization of locomotor networks. Curr Biol 2015, 25, 1426–1436.

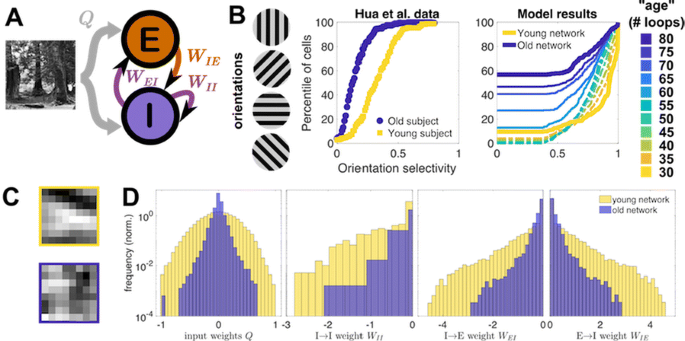

O7 Co-refinement of network interactions and neural response properties in visual cortex

Sigrid Trägenap1, Bettina Hein1, David Whitney2, Gordon Smith3, David Fitzpatrick2, Matthias Kaschube1

1Frankfurt Institute for Advanced Studies (FIAS), Department of Neuroscience, Frankfurt, Germany; 2Max Planck Florida Institute, Department of Neuroscience, Jupiter, FL, United States of America; 3University of Minnesota, Department of Neuroscience, Minneapolis, MN, United States of America

Correspondence: Sigrid Trägenap (traegenap@fias.uni-frankfurt.de)

BMC Neuroscience 2019, 20(Suppl 1):O7

In the mature visual cortex, local tuning properties are linked through distributed network interactions with a remarkable degree of specificity [1]. However, it remains unknown whether the tight linkage between functional tuning and network structure is an intrinsic feature of cortical circuits, or instead gradually emerges in development. Combining virally-mediated expression of GCaMP6s in pyramidal neurons with wide-field epifluorescence imaging in ferret visual cortex, we longitudinally monitored the spontaneous activity correlation structure (our proxy for intrinsic network interactions) and the emergence of orientation tuning around eye-opening.

We find that prior to eye-opening, the layout of emerging iso-orientation domains is only weakly similar to the spontaneous correlation structure. Nonetheless, within one week of visual experience, the layout of iso-orientation domains and the spontaneous correlation structure become rapidly matched. Motivated by these observations, we developed dynamical equations to describe how tuning and network correlations co-refine to become matched with age. Here we propose an objective function capturing the degree of consistency between orientation tuning and network correlations. Then, by gradient descent on this objective function, we derive dynamical equations that predict an interdependent refinement of orientation tuning and network correlations. To first approximation, these equations predict that correlated neurons become more similar in orientation tuning over time, while network correlations follow a relaxation process increasing the degree of self-consistency in their link to tuning properties.

Empirically, we indeed observe a refinement with age in both orientation tuning and spontaneous correlations. Furthermore, we find that this framework can utilize early measurements of orientation tuning and correlation structure to predict aspects of the future refinement in orientation tuning and spontaneous correlations. We conclude that visual response properties and network interactions show a considerable degree of coordinated and interdependent refinement towards a self-consistent configuration in the developing visual cortex.

Reference

1. Smith GB, Hein B, Whitney DE, Fitzpatrick D, Kaschube M. Distributed network interactions and their emergence in developing neocortex. Nature Neuroscience 2018 Nov;21(11):1600.

O8 Receptive field structure of border ownership-selective cells in response to direction of figure

Ko Sakai1, Kazunao Tanaka1, Rüdiger von der Heydt2, Ernst Niebur3

1University of Tsukuba, Department of Computer Science, Tsukuba, Japan; 2Johns Hopkins University, Krieger Mind/Brain Institute, Baltimore, United States of America; 3Johns Hopkins, Neuroscience, Baltimore, MD, United States of America

Correspondence: Ko Sakai (sakai@cs.tsukuba.ac.jp)

BMC Neuroscience 2019, 20(Suppl 1):O8

The responses of border ownership-selective cells (BOCs) have been reported to signal the direction of figure (DOF) along the contours in natural images with a variety of shapes and textures [1]. We examined the spatial structure of the optimal stimuli for BOCs in monkey visual cortical area V2 to determine the structure of the receptive field. We computed the spike-triggered average (STA) from responses of the BOCs to natural images (JHU archive, http://dx.doi.org/10.7281/T1C8276W). To estimate the STA in response to figure-ground organization of natural images, we tagged figure regions with luminance contrast. The left panel in Fig. 1 illustrates the procedure for STA computation. We first aligned all images to a given cell’s preferred orientation and preferred direction of figure. We then grouped the images based on the luminance contrast of their figure regions with respect to their ground regions, and averaged them separately for each group. By averaging the bright-figure stimuli with weights based on each cell’s spike count, we were able to observe the optimal figure and ground sub-regions as brighter and darker regions, respectively. By averaging the dark-figure stimuli, we obtained the reverse. We then generated the STA by subtracting the average of the dark-figure stimuli from that of the bright-figure stimuli. This subtraction canceled out the dependence of the response on contrast. We compensated for the bias due to the non-uniformity of luminance in the natural images by subtracting the simple ensemble average of the stimuli (equivalent to weight = 1 for all stimuli) from the weighted average. The mean STA across 22 BOCs showed facilitated and suppressed sub-regions in response to the figure towards the preferred and non-preferred DOFs, respectively (Fig. 1, right panel). The structure was shown more clearly when figure and ground were replaced by a binary mask.
The result demonstrates, for the first time, the antagonistic spatial structure in the receptive field of BOCs in response to figure-ground organization.
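The STA procedure described above can be sketched in a few lines of NumPy. This is only an illustrative reading of the text, not the authors' code: the function names, the array layout, and the choice to subtract the unweighted ensemble average separately within each contrast group are assumptions.

```python
import numpy as np

def weighted_mean(images, weights):
    """Weighted average over the first axis (one image per trial)."""
    w = np.asarray(weights, dtype=float)
    return np.tensordot(w, images, axes=(0, 0)) / w.sum()

def contrast_tagged_sta(images, spikes, bright_figure):
    """Illustrative STA from figure-tagged natural-image stimuli.

    images        : (n_trials, height, width) array, assumed to be already
                    aligned to the cell's preferred orientation and
                    preferred direction of figure
    spikes        : (n_trials,) spike counts used as weights
    bright_figure : (n_trials,) bool, True where the figure region is
                    brighter than the ground region
    """
    images = np.asarray(images, dtype=float)
    spikes = np.asarray(spikes, dtype=float)
    bright = np.asarray(bright_figure, dtype=bool)

    def group_sta(mask):
        imgs, w = images[mask], spikes[mask]
        # Spike-weighted mean minus the plain ensemble mean (weight = 1),
        # computed per group (an assumption of this sketch).
        return weighted_mean(imgs, w) - imgs.mean(axis=0)

    # Bright-figure average minus dark-figure average
    return group_sta(bright) - group_sta(~bright)
```

With uniform spike counts the weighted and unweighted averages coincide, so the sketch returns an all-zero map; structure appears only where spike counts covary with pixel luminance.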

(Left) We tagged figure regions with luminance contrast to compute the STA in response to figure-ground organization. Natural images with bright foreground were weighted by the cell’s spike counts and summed. The analogue was computed for scenes with dark foregrounds and the difference taken. (Right) The computed STA across 22 cells revealed antagonistic sub-regions

Acknowledgment: This work was partly supported by JSPS (KAKENHI, 26280047, 17H01754) and National Institutes of Health (R01EY027544 and R01DA040990).

Reference

1. Williford JR, Von Der Heydt R. Figure-ground organization in visual cortex for natural scenes. eNeuro 2016 Nov; 3(6) 1–15

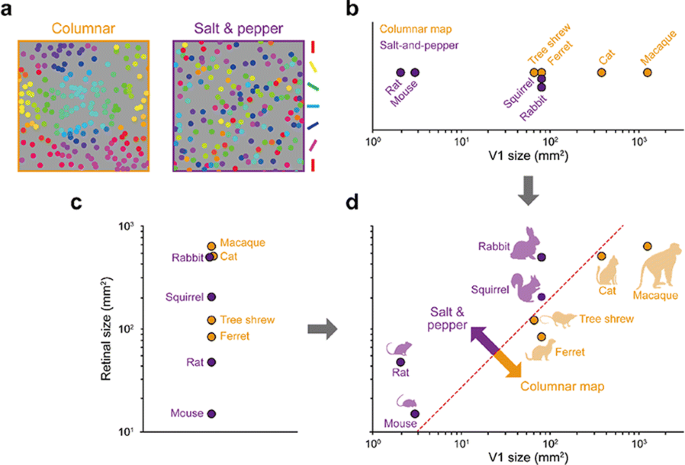

O9 Development of periodic and salt-and-pepper orientation maps from a common retinal origin

Min Song, Jaeson Jang, Se-Bum Paik

Korea Advanced Institute of Science and Technology, Department of Biology and Brain Engineering, Daejeon, South Korea

Correspondence: Min Song (night@kaist.ac.kr)

BMC Neuroscience 2019, 20(Suppl 1):O9

Spatial organization of orientation tuning in the primary visual cortex (V1) is arranged in different forms across mammalian species. In some species (e.g. monkeys or cats), the preferred orientation continuously changes across the cortical surface (columnar orientation map), while other species (e.g. mice or rats) have a random-like distribution of orientation preference, termed salt-and-pepper organization. However, it remains unclear why the organization of the cortical circuit develops differently across species. Previously, it was suggested that each type of circuit might be a result of wiring optimization under different conditions of evolution [1], but the developmental mechanism of each organization of orientation tuning remains unclear. In this study, we propose that the structural variations between cortical circuits across species simply arise from differences in the physical constraints of the visual system: the size of the retina and V1 (see Fig. 1). By expanding the statistical wiring model proposing that the orientation tuning of a V1 neuron is restricted by the local arrangement of ON and OFF retinal ganglion cells (RGCs) [2, 3], we suggest that the number of V1 neurons sampling a given RGC (sampling ratio) is a crucial factor in determining the continuity of orientation tuning in V1. Our simulation results show that as the sampling ratio increases, neighboring V1 neurons receive similar retinal inputs, resulting in continuous changes in orientation tuning. To validate our prediction, we estimated the sampling ratio of each species from the physical size of the retina and V1 [5] and compared it with the organization of orientation tuning. As predicted, this ratio could successfully distinguish diverse mammalian species into two groups according to the organization of orientation tuning, even though the organization has not been clearly predicted by considering only a single factor in the visual system (e.g. V1 size or visual acuity; [4]).
Our results suggest a common retinal origin of orientation preference across diverse mammalian species, while its spatial organization can vary depending on the physical constraints of the visual system.

References

1. Kaschube M. Neural maps versus salt-and-pepper organization in visual cortex. Current opinion in neurobiology 2014, 24: 95-102.
2. Ringach DL. “Haphazard wiring of simple receptive fields and orientation columns in visual cortex.” Journal of neurophysiology 2004, 92.1: 468-476.
3. Ringach DL. On the origin of the functional architecture of the cortex. PloS one 2007, 2.2: e251.
4. Van Hooser SD, et al. Orientation selectivity without orientation maps in visual cortex of a highly visual mammal. Journal of Neuroscience 2005, 25.1: 19-28.
5. Colonnese MT, et al. A conserved switch in sensory processing prepares developing neocortex for vision. Neuron 2010, 67.3: 480-498.

O10 Explaining the pitch of FM-sweeps with a predictive hierarchical model

Alejandro Tabas1, Katharina von Kriegstein2

1Max Planck Institute for Human Cognitive and Brain Sciences, Research Group in Neural Mechanisms of Human Communication, Leipzig, Germany; 2Technische Universität Dresden, Chair of Clinical and Cognitive Neuroscience, Faculty of Psychology, Dresden, Germany

Correspondence: Alejandro Tabas (alextabas@gmail.com)

BMC Neuroscience 2019, 20(Suppl 1):O10

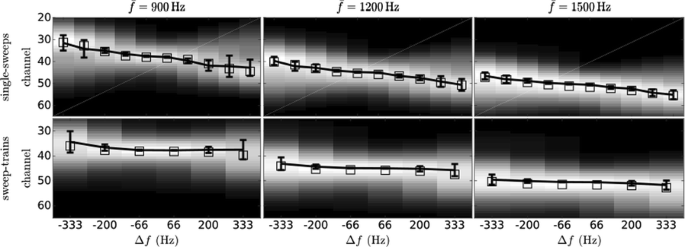

Frequency modulation (FM) is a basic constituent of vocalisation. FM-sweeps in the frequency range and modulation rates of speech have been shown to elicit a pitch percept that consistently deviates from the sweep average frequency [1]. Here, we use this perceptual effect to inform a model characterising the neural encoding of FM.

First, we performed a perceptual experiment where participants were asked to match the pitch of 30 sweeps with probe sinusoids of the same duration. The elicited pitch systematically deviated from the average frequency of the sweep by an amount that depended linearly on the modulation slope. Previous studies [2] have proposed that the deviance might be due to a fixed-sized-window integration process that fosters frequencies present at the end of the stimulus. To test this hypothesis, we conducted a second perceptual experiment considering the pitch elicited by continuous trains of five concatenated sweeps. As before, participants were asked to match the pitch of the sweep trains with probe sinusoids. Our results showed that the pitch deviance from the mean observed in sweeps was severely reduced in the train stimuli, in direct contradiction with the fixed-sized-integration-window hypothesis.

The perceptual effects may also stem from unexpected interactions between the frequencies spanned in the stimuli during pitch processing. We studied this possibility in two well-established families of mechanistic models of pitch. First, we considered a general spectral model that computes pitch as the expected value of the activity distribution across the cochlear decomposition. Due to adaptation effects, this model fostered the spectral range present at the beginning of the sweep: the exact opposite of what we observed in the experimental data. Second, we considered the predictions of the summary autocorrelation function (SACF) [3], a prototypical model of temporal pitch processing that considers the temporal structure of the auditory nerve activity. The SACF was unable to integrate temporal pitch information quickly enough to keep track of the modulation rate, yielding inconsistent pitch predictions that deviated stochastically from the average frequency.
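As a rough illustration of the spectral read-out described above, the sketch below computes pitch as the activity-weighted mean of channel centre frequencies and shows how a decaying response gain biases the estimate towards the start of a sweep. It is a toy under stated assumptions, not the cochlear model used in the study: the exponential adaptation profile, the function names and all parameter values are hypothetical.

```python
import numpy as np

def spectral_pitch(centre_freqs_hz, activity):
    """Pitch estimate as the expected value of frequency under the
    (normalised) activity distribution across channels."""
    f = np.asarray(centre_freqs_hz, dtype=float)
    a = np.asarray(activity, dtype=float)
    if a.sum() <= 0:
        raise ValueError("activity must have positive total mass")
    return float(np.sum(f * a) / np.sum(a))

def sweep_pitch_with_adaptation(f_start, f_end, n_steps=100, decay=2.0):
    """Toy linear sweep whose response gain decays over its duration."""
    freqs = np.linspace(f_start, f_end, n_steps)
    # Hypothetical adaptation: gain falls exponentially along the sweep,
    # so frequencies visited early carry more weight.
    gain = np.exp(-decay * np.linspace(0.0, 1.0, n_steps))
    return spectral_pitch(freqs, gain)
```

In this toy, a rising sweep from 1000 to 1200 Hz yields an estimate below the 1100 Hz average, and a falling sweep yields one above it, which is the direction of bias the text attributes to adaptation in the spectral model.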

Here, we introduce an alternative hypothesis based on top-down facilitation. Top-down efferents constitute an important fraction of the fibres in the auditory nerve; moreover, top-down predictive facilitation may reduce the metabolic cost and increase the speed of the neural encoding of expected inputs. Our model incorporates a second layer of neurons encoding FM direction that, after detecting that the incoming inputs are consistent with a rising (falling) sweep, anticipate that neurons encoding immediately higher (lower) frequencies will activate next. This prediction is propagated downwards to neurons encoding such frequencies, increasing their readiness and effectively inflating their weight during pitch temporal integration.

The described mechanism fully reproduces our and previously published experimental results (Fig. 1). We conclude that top-down predictive modulation plays an important role in the neural encoding of frequency modulation even at early stages of the processing hierarchy.

References

1. d’Alessandro C, Castellengo M. The pitch of short‐duration vibrato tones. The Journal of the Acoustical Society of America 1994 Mar;95(3):1617-30.
2. Brady PT, House AS, Stevens KN. Perception of sounds characterized by a rapidly changing resonant frequency. The Journal of the Acoustical Society of America 1961 Oct;33(10):1357-62.
3. Meddis R, O’Mard LP. Virtual pitch in a computational physiological model. The Journal of the Acoustical Society of America 2006 Dec;120(6):3861-9.

O11 Effects of anesthesia on coordinated neuronal activity and information processing in rat primary visual cortex

Heonsoo Lee, Shiyong Wang, Anthony Hudetz

University of Michigan, Anesthesiology, Ann Arbor, MI, United States of America

Correspondence: Heonsoo Lee (heonslee@umich.edu)

BMC Neuroscience 2019, 20(Suppl 1):O11

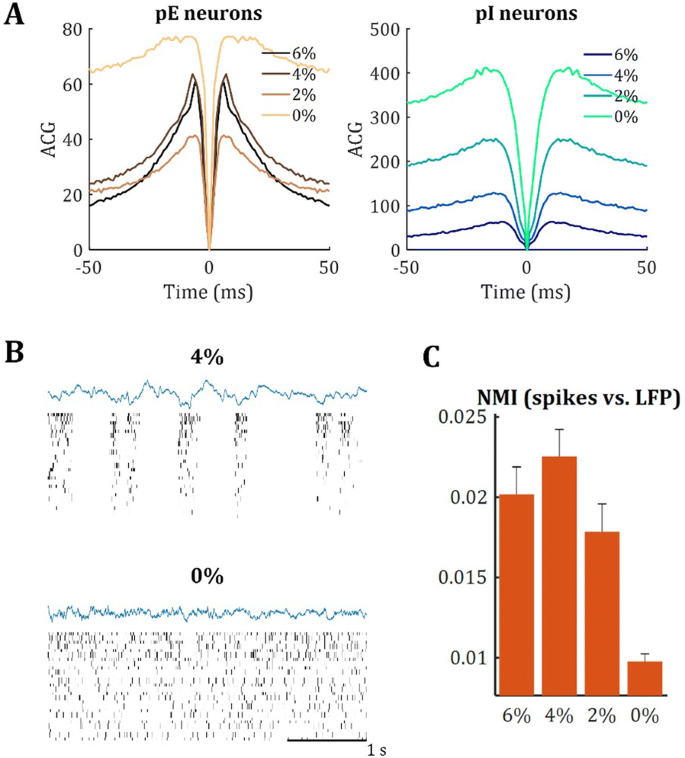

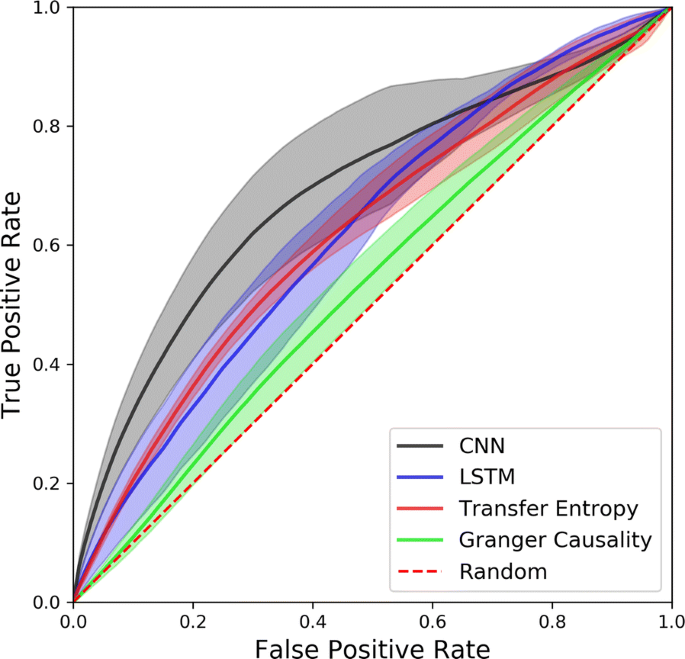

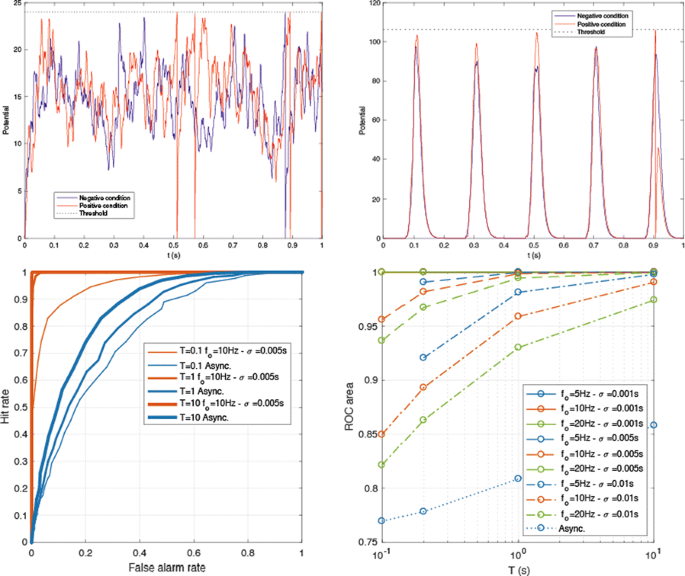

Introduction: Understanding how anesthesia affects neural activity is important for revealing the mechanism of loss and recovery of consciousness. Despite numerous studies during the past decade, how anesthesia alters the spiking activity of different types of neurons and information processing within an intact neural network is not fully understood. Based on prior in vitro studies, we hypothesized that excitatory and inhibitory neurons in neocortex are differentially affected by anesthetics. We also predicted that individual neurons are constrained to population activity, leading to impaired information processing within a neural network.

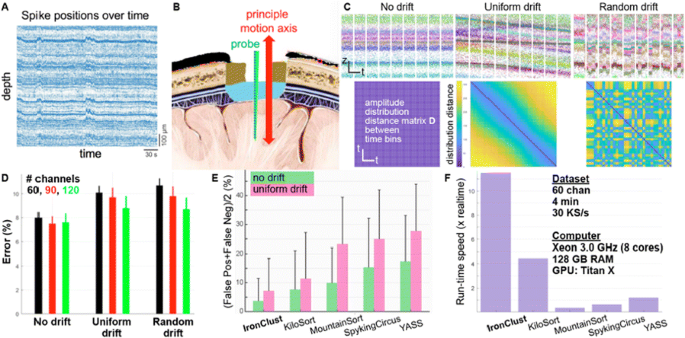

Methods: We implanted sixty-four-contact microelectrode arrays in primary visual cortex (layer 5/6, contacts spanning 800 µm depth and 1600 µm width) for recording of extracellular unit activity at three steady-state levels of anesthesia (6, 4 and 2% desflurane) and wakefulness (number of rats = 8). Single unit activities were extracted and putative excitatory and inhibitory neurons were identified based on their spike waveforms and autocorrelogram characteristics (number of neurons = 210). Neuronal features such as firing rate, interspike interval (ISI), bimodality, and monosynaptic spike transmission probabilities were investigated. Normalized mutual information and transfer entropy were also applied to investigate the interaction between spike trains and population activity (local field potential; LFP).
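To make the transfer-entropy measure mentioned above concrete, here is a minimal plug-in estimator for two discrete sequences with a history length of one sample. It is a sketch only: the abstract does not state how spike trains and LFP were discretised or which estimator was used, so the binning into small non-negative integer symbols, the history length and the function name are assumptions.

```python
import numpy as np

def transfer_entropy(source, target, lag=1):
    """Plug-in transfer entropy (in bits) from `source` to `target`.

    Both inputs are 1-D sequences of non-negative integer symbols
    (e.g. binned spike counts or a binarised LFP). Only one past sample
    of each sequence, `lag` steps back, is used as history.
    """
    x = np.asarray(source, dtype=int)
    y = np.asarray(target, dtype=int)
    y_next, y_past, x_past = y[lag:], y[:-lag], x[:-lag]

    # Joint histogram over (y_next, y_past, x_past)
    nx, ny = int(x.max()) + 1, int(y.max()) + 1
    counts = np.zeros((ny, ny, nx))
    np.add.at(counts, (y_next, y_past, x_past), 1)
    p_joint = counts / counts.sum()

    p_ypast_xpast = p_joint.sum(axis=0, keepdims=True)    # p(y_past, x_past)
    p_ynext_ypast = p_joint.sum(axis=2, keepdims=True)    # p(y_next, y_past)
    p_ypast = p_joint.sum(axis=(0, 2), keepdims=True)     # p(y_past)

    # TE = sum p(joint) * log2[ p(y_next | y_past, x_past) / p(y_next | y_past) ]
    nz = p_joint > 0
    ratio = (p_joint * p_ypast)[nz] / (p_ypast_xpast * p_ynext_ypast)[nz]
    return float(np.sum(p_joint[nz] * np.log2(ratio)))
```

Comparing `transfer_entropy(lfp_symbols, spike_symbols)` with the reverse direction gives a crude directional index; plug-in estimates are biased upwards for short recordings, so in practice a surrogate-based correction would be needed.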

Results: First, anesthesia significantly altered characteristics of individual neurons. Firing rate of most neurons was reduced; this effect was more pronounced in inhibitory neurons. Excitatory neurons showed enhanced bursting activity (ISI<9 ms) and silent periods (hundreds of milliseconds) (Fig. 1A). Second, anesthesia disrupted information processing within a neural network. Neurons shared the silent periods, resulting in synchronous population activity (neural oscillations), despite the suppressed monosynaptic connectivity (Fig. 1B). The population activity (LFP) showed reduced information content (entropy), and was easily predicted by individual neurons; that is, shared information between individual neurons and population activity was significantly increased (Fig. 1C). Transfer entropy analysis revealed a strong directional influence from LFP to individual neurons, suggesting that neuronal activity is constrained to the synchronous population activity.

Conclusions: This study reveals how excitatory and inhibitory neurons are differentially affected by anesthetic, leading to synchronous population activity and impaired information processing. These findings provide an integrated understanding of anesthetic effects on neuronal activity and information processing. Further study of stimulus evoked activity and computational modeling will provide a more detailed mechanism of how anesthesia alters neural activity and disrupts information processing.

O12 Learning where to look: a foveated visuomotor control model

Emmanuel Daucé1, Pierre Albigès2, Laurent Perrinet3

1Aix-Marseille Univ, INS, Marseille, France; 2Aix-Marseille Univ, Neuroschool, Marseille, France; 3CNRS - Aix-Marseille Université, Institut de Neurosciences de la Timone, Marseille, France

Correspondence: Emmanuel Daucé (emmanuel.dauce@centrale-marseille.fr)

BMC Neuroscience 2019, 20(Suppl 1):O12

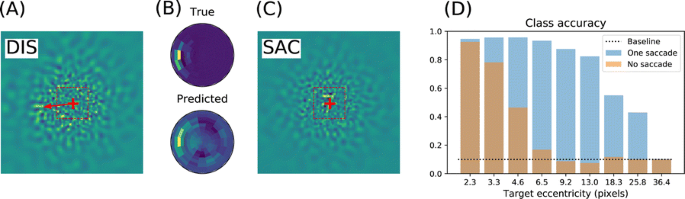

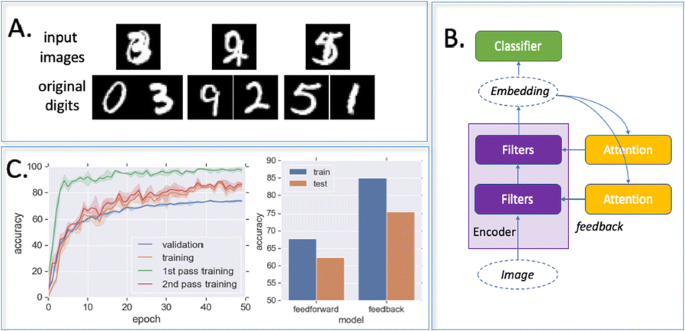

We emulate a model of active vision which aims at finding a visual target whose position and identity are unknown. This generic visual search problem is of broad interest to machine learning, computer vision and robotics, but also to neuroscience, as it speaks to the mechanisms underlying foveation and more generally to low-level attention mechanisms. From a computer vision perspective, the problem is generally addressed by processing the different hypotheses (categories) at all possible spatial configurations through dedicated parallel hardware. The human visual system, however, seems to employ a different strategy, through a combination of a foveated sensor with the capacity of rapidly moving the center of fixation using saccades. Visual processing is done through fast and specialized pathways, one of which mainly conveys information about target position and speed in the peripheral space (the “where” pathway), while the other mainly conveys information about the identity of the target (the “what” pathway). The combination of the two pathways is expected to provide most of the useful knowledge about the external visual scene. Still, it is unknown why such a separation exists. Active vision methods provide the ground principles of saccadic exploration, assuming the existence of a generative model from which both the target position and identity can be inferred through active sampling. Taking for granted that (i) the position and category of objects are independent and (ii) the visual sensor is foveated, we consider how to minimize the overall computational cost of finding a target. This justifies the design of two complementary processing pathways: first a classical image classifier, assuming that the gaze is on the object, and second a peripheral processing pathway learning to identify the position of a target in retinotopic coordinates. This framework was tested on a simple task of finding digits in a large, cluttered image (see Fig. 1).
Results demonstrate the benefit of specifically learning where to look, and this before actually identifying the target category (with cluttered noise ensuring the category is not readable in the periphery). In the “what” pathway, the accuracy drops to the baseline at a mere 5 pixels away from the center of fixation, while issuing a saccade is beneficial up to 26 pixels away, allowing a much wider coverage of the image. The difference between the two distributions forms an “accuracy gain” that quantifies the benefit of issuing a saccade with respect to a central prior. Until the central classifier is confident, the system should thus perform a saccade to the most likely target position. The different accuracy predictions, such as the ones done in the “what” and the “where” pathway, may also explain more elaborate decision making, such as the inhibition of return. The approach is also energy-efficient as it includes the strong compression rate performed by retina and V1 encoding, which is preserved up to the action selection level. The computational cost of this active inference strategy may thus be far lower than that of a brute force framework. This provides evidence of the importance of identifying “putative interesting targets” first and we highlight some possible extensions of our model both in computer vision and modeling.

Simulated active vision agent: a Example retinotopic input. b Example network output (’Predicted’) compared with ground truth (’True’). c Accuracy estimation after saccade decision. d Orange bars: accuracy of a central classifier w.r.t target eccentricity; Blue bars: classification rate after one saccade (1000 trials average per eccentricity scale)

O13 A standardized formalism for voltage-gated ion channel models

Chaitanya Chintaluri1, Bill Podlaski2, Pedro Goncalves3, Jan-Matthis Lueckmann3, Jakob H. Macke3, Tim P. Vogels1

1University of Oxford, Centre for Neural Circuits and Behaviour, Oxford, United Kingdom; 2Champalimaud Center for the Unknown, Lisbon, Portugal; 3Research Center Caesar; Technical University of Munich, Bonn, Germany

Correspondence: Bill Podlaski (william.podlaski@gmail.com)

BMC Neuroscience 2019, 20(Suppl 1):O13

Biophysical neuron modelling has become widespread in neuroscience research, with the combination of diverse ion channel kinetics and morphologies being used to explain various single-neuron properties. However, there is no standard by which ion channel models are constructed, making it very difficult to relate models to each other and to experimental data. The complexity and scale of these models also make them especially susceptible to problems with reproducibility and reusability, especially when translating between different simulators. To address these issues, we revive the idea of a standardised model for ion channels based on a thermodynamic interpretation of the Hodgkin-Huxley formalism, and apply it to a recently curated database of approximately 2500 published ion channel models (ICGenealogy). We show that a standard formulation fits the steady-state and time-constant curves of nearly all voltage-gated models found in the database, and reproduces responses to voltage-clamp protocols with high fidelity, thus serving as a functional translation of the original models. We further test the correspondence of the standardised models in a realistic physiological setting by simulating the complex spiking behaviour of multi-compartmental single-neuron models in which one or several of the ion channel models are replaced by the corresponding best-fit standardised model. These simulations result in qualitatively similar behaviour, often nearly identical to the original models. Notably, when differences do arise, they likely reflect the fact that many of the models are very finely tuned. Overall, this standard formulation facilitates better understanding and comparisons among ion channel models, as well as reusability of models through easy functional translation between simulation languages.
Additionally, our analysis allows for a direct comparison of models based on parameter settings, and can be used to make new observations about the space of ion channel kinetics across different ion channel subtypes, neuron types and species.
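For readers unfamiliar with the thermodynamic view of Hodgkin-Huxley gating, the sketch below shows the textbook two-state form, in which forward and backward rates depend exponentially on voltage and jointly determine the steady-state and time-constant curves. The abstract does not give the exact standardised formulation, so this particular parameterisation, the function name and the default values are assumptions chosen for illustration.

```python
import numpy as np

def thermodynamic_gate(v_mv, v_half=-40.0, k=8.0, rate=1.0, gamma=0.5, tau0=0.1):
    """Steady state and time constant of a two-state voltage-dependent gate.

    v_mv   : membrane potential(s) in mV
    v_half : half-activation voltage in mV (hypothetical default)
    k      : slope factor in mV; a negative value gives an inactivating gate
    rate   : rate constant at v_half in 1/ms
    gamma  : asymmetry of the energy barrier, between 0 and 1
    tau0   : minimum time constant in ms (a common practical addition)
    """
    v = np.asarray(v_mv, dtype=float)
    u = (v - v_half) / k
    alpha = rate * np.exp(gamma * u)            # opening rate
    beta = rate * np.exp(-(1.0 - gamma) * u)    # closing rate
    x_inf = alpha / (alpha + beta)              # equals 1 / (1 + exp(-u))
    tau = tau0 + 1.0 / (alpha + beta)
    return x_inf, tau
```

Fitting such a form to a published channel model would amount to adjusting these few parameters until `x_inf` and `tau` match the original steady-state and time-constant curves, which is what makes parameter-based comparison across models possible.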

O14 A priori identifiability of a binomial synapse

Camille Gontier1, Jean-Pascal Pfister2

1University of Bern, Department of Physiology, Bern, Switzerland; 2University of Bern, Department of Physiology, Bern, Switzerland

Correspondence: Camille Gontier (gontier@pyl.unibe.ch)

BMC Neuroscience 2019, 20(Suppl 1):O14

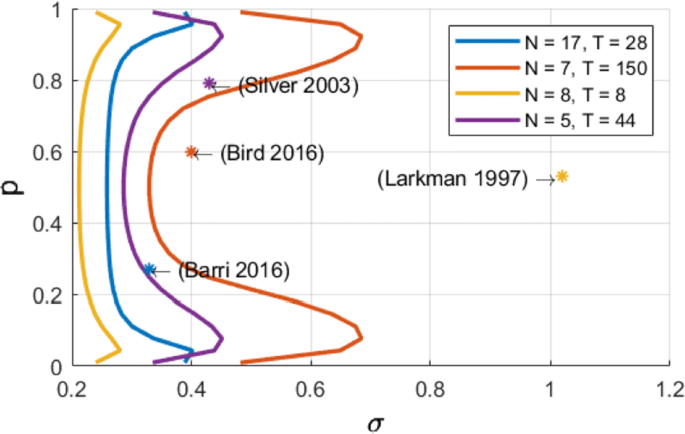

Synapses are highly stochastic transmission units. A classical model describing this transmission is called the binomial model [1], which assumes that there are N independent release sites, each having the same release probability p; and that each vesicle release gives rise to a quantal current q. The parameters of the binomial model (N, p, q, and the recording noise) can be estimated from postsynaptic responses, either by following a maximum-likelihood approach [2] or by computing the posterior distribution over the parameters [3].
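The binomial model above implies a simple mixture likelihood for each recorded response, sketched here for illustration. The sketch assumes additive Gaussian recording noise with standard deviation `sigma` and independent trials; the function names are hypothetical and this is not the estimation code of references [2] or [3].

```python
import numpy as np
from math import comb

def binomial_synapse_pdf(e, n_sites, p, q, sigma):
    """Density of postsynaptic response(s) `e` under the binomial model.

    k of the n_sites release sites release a vesicle with probability
    Binomial(k; n_sites, p); each release contributes a quantal size q,
    and Gaussian noise of standard deviation sigma is added (assumption).
    """
    e = np.atleast_1d(np.asarray(e, dtype=float))
    k = np.arange(n_sites + 1)
    coeffs = np.array([comb(n_sites, int(i)) for i in k], dtype=float)
    weights = coeffs * p**k * (1.0 - p) ** (n_sites - k)
    # One Gaussian component per possible number of released vesicles
    z = (e[:, None] - q * k[None, :]) / sigma
    gauss = np.exp(-0.5 * z**2) / (sigma * np.sqrt(2.0 * np.pi))
    return gauss @ weights

def log_likelihood(responses, n_sites, p, q, sigma):
    """Log-likelihood of independent responses under the binomial model."""
    return float(np.sum(np.log(binomial_synapse_pdf(responses, n_sites, p, q, sigma))))
```

Maximising `log_likelihood` over the parameters gives a maximum-likelihood fit; when `sigma` is large relative to `q`, the mixture components overlap and the peaks in the response histogram disappear, which is where identifiability becomes an issue.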

But these estimates might be subject to parameter identifiability issues. This uncertainty of the parameter estimates is usually assessed a posteriori from recorded data, for instance by using a re-sampling procedure such as parametric bootstrap.

Here, we propose a methodology for a priori quantifying the structural identifiability of the parameters. A lower bound on the error of parameter estimates can be obtained analytically using the Cramer-Rao bound. Instead of simply assessing a posteriori the validity of their parameter estimates, it is thus possible for experimentalists to select a priori a lower bound on the standard deviation of the estimates and to select the number of data points and to tune the level of noise accordingly.
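
To make the idea concrete, here is a minimal numerical sketch of a Cramér-Rao bound for the binomial model. It is a simplification and not the authors' analytical derivation: only the release probability p is treated as unknown (N, q and the noise level are assumed known), the T responses are assumed independent, and the Fisher information is obtained by numerical integration. All function names are hypothetical.

```python
import math

def fisher_info_p(n, p, q, sigma, steps=4000):
    """Fisher information about p carried by one postsynaptic response.

    Response model: e = q * k + noise, with k ~ Binomial(n, p) and
    Gaussian noise of standard deviation sigma.
    """
    pmf = [math.comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n + 1)]
    lo, hi = -8.0 * sigma, n * q + 8.0 * sigma
    dx = (hi - lo) / steps
    norm = 1.0 / (sigma * math.sqrt(2.0 * math.pi))
    total = 0.0
    for i in range(steps + 1):
        e = lo + i * dx
        f = 0.0   # likelihood of the response e
        df = 0.0  # derivative of the likelihood with respect to p
        for k in range(n + 1):
            z = (e - q * k) / sigma
            w = pmf[k] * norm * math.exp(-0.5 * z * z)
            f += w
            df += w * (k - n * p) / (p * (1.0 - p))
        if f > 0.0:
            edge = 0.5 if i in (0, steps) else 1.0  # trapezoid rule
            total += edge * df * df / f * dx
    return total

def crb_std_p(n, p, q, sigma, t):
    """Lower bound on the standard deviation of any unbiased estimate of p
    from t independent responses."""
    return 1.0 / math.sqrt(t * fisher_info_p(n, p, q, sigma))

# How the bound degrades with recording noise (illustrative values only).
for sigma in (0.05, 0.3, 1.0):
    print(sigma, crb_std_p(n=5, p=0.4, q=1.0, sigma=sigma, t=100))
```

With negligible noise the bound approaches the purely binomial value sqrt(p(1-p)/(TN)); as sigma grows the bound increases, so more data points are needed to reach the same precision. A bound for all parameters jointly would require the full Fisher information matrix rather than this scalar version.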

Besides parameter identifiability, another critical issue is the so-called model identifiability, i.e. the possibility, given a certain number of data points T and a certain level of measurement noise, to find the model of synapse that fits our data the best. For instance, when observing discrete peaks on the histogram of post-synaptic currents, one might be tempted to conclude that the binomial model (“multi-quantal hypothesis”) is the best one to fit the data. However, these peaks might actually be artifacts due to noisy or scarce data points, and data might be best explained by a simpler Gaussian distribution (“uni-quantal hypothesis”).
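
As a rough illustration of why recording noise matters for telling the two hypotheses apart, the sketch below counts the peaks of the theoretical response density under the binomial model for a given noise level. Counting modes is only a crude proxy chosen for this example; it is not the identifiability criterion derived by the authors, and the function names are hypothetical.

```python
import math

def mixture_density(e, n, p, q, sigma):
    """Density of a response e = q * k + noise, k ~ Binomial(n, p),
    with Gaussian noise of standard deviation sigma."""
    total = 0.0
    for k in range(n + 1):
        weight = math.comb(n, k) * p**k * (1 - p) ** (n - k)
        z = (e - q * k) / sigma
        total += weight * math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))
    return total

def count_modes(n, p, q, sigma, steps=2000):
    """Count local maxima of the density on a regular grid."""
    lo, hi = -6.0 * sigma, n * q + 6.0 * sigma
    dx = (hi - lo) / steps
    f = [mixture_density(lo + i * dx, n, p, q, sigma) for i in range(steps + 1)]
    modes = 0
    for i in range(1, steps):
        # ">=" on the right side counts a two-point plateau only once.
        if f[i] > f[i - 1] and f[i] >= f[i + 1]:
            modes += 1
    return modes

for sigma in (0.1, 0.3, 1.0):
    print(f"sigma={sigma}: {count_modes(5, 0.5, 1.0, sigma)} peak(s)")
```

When the noise is small relative to the quantal size, every release count produces its own peak; when the noise is comparable to the quantal size, the density becomes single-peaked and visually close to a Gaussian, even though the underlying process is still binomial.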

Model selection tools are classically used to determine a posteriori which model best fits a data set, but little is known about whether it is possible a priori (in terms of number of data points or recording noise) to discriminate the binomial model from a simpler distribution.

We compute an analytical identifiability domain for which the binomial model is correctly identified (Fig. 1), and we verify it by simulations. Our proposed methodology can be further extended and applied to other models of synaptic transmission, making it possible to define and quantitatively assess a priori the experimental conditions needed to reliably fit the model parameters, as well as to test hypotheses on the desired model compared to simpler versions.

Fig. 1 Published estimates of binomial parameters (dots), and corresponding identifiability domains (solid lines: the model is identifiable if, for a given release probability p, the recording noise does not exceed sigma). Applying our analysis to fitted parameters of the binomial model found in previous studies, we find that none of them are in the parameter range that would make the model identifiable.

In conclusion, our approach aims to provide experimentalists with objectives for experimental design, namely the required number of data points and the maximally acceptable recording noise. This approach makes it possible to optimize experimental design, draw more robust conclusions on the validity of the parameter estimates, and correctly validate hypotheses on the binomial model.

References

1. Katz B. The release of neural transmitter substances. Liverpool University Press (1969): 5–39.

2. Barri A, Wang Y, Hansel D, Mongillo G. Quantifying repetitive transmission at chemical synapses: a generative-model approach. eNeuro 2016 Mar;3(2).

3. Bird AD, Wall MJ, Richardson MJ. Bayesian inference of synaptic quantal parameters from correlated vesicle release. Frontiers in computational neuroscience 2016 Nov 25; 10:116.

O15 A flexible, fast and systematic method to obtain reduced compartmental models

Willem Wybo, Walter Senn

University of Bern, Department of Physiology, Bern, Switzerland

Correspondence: Willem Wybo (willem.a.m.wybo@gmail.com)

BMC Neuroscience 2019, 20(Suppl 1):O15

Most input signals received by neurons in the brain impinge on their dendritic trees. Before these signals are transmitted downstream as action potential (AP) output, the dendritic tree performs a variety of computations on them that are vital to normal behavioural function [3, 8]. In most modelling studies, however, dendrites are omitted due to the cost associated with simulating them. Biophysical neuron models can contain thousands of compartments, rendering it infeasible to employ these models in meaningful computational tasks. Thus, to understand the role of dendritic computations in networks of neurons, it is necessary to simplify biophysical neuron models. Previous work has either explored advanced mathematical reduction techniques [6, 10] or has relied on ad-hoc simplifications to reduce compartment numbers [11]. Both of these approaches have inherent difficulties that prevent widespread adoption: advanced mathematical techniques cannot be implemented with standard simulation tools such as NEURON [2] or BRIAN [4], whereas ad-hoc methods are tailored to the problem at hand and generalize poorly. Here, we present an approach that overcomes both of these hurdles. First, our method simply outputs standard compartmental models (Fig. 1A). The models can thus be simulated with standard tools. Second, our method is systematic, as the parameters of the reduced compartmental models are optimized with a linear least squares fitting procedure to reproduce the impedance matrix of the biophysical model (Fig. 1B). This matrix relates input current to voltage, and thus determines the response properties of the neuron [9]. By fitting a reduced model to this matrix, we obtain the response properties of the full model at a vastly reduced computational cost. Furthermore, since we are solving a linear least squares problem, the fitting procedure is well-defined, as the error function has a single minimum, and computationally efficient.
Our method is not constrained to passive neuron models. By linearizing ion channels around suitably chosen sets of expansion points, we can extend the fitting procedure to yield appropriately rescaled maximal conductances for these ion channels (Fig. 1C). With these conductances, voltage and spike output can be predicted accurately (Fig. 1D, E). Since our reduced models reproduce the response properties of the biophysical models, non-linear synaptic currents, such as NMDA, are also integrated correctly. Our models thus reproduce dendritic NMDA spikes (Fig. 1F). Our method is also flexible, as any dendritic computation that can be implemented in a biophysical model can be reproduced by choosing an appropriate set of locations on the morphology at which to fit the impedance matrix. Direction selectivity [1], for instance, can be implemented by fitting a reduced model to a set of locations distributed on a linear branch, whereas independent subunits [5] can be implemented by choosing locations on separate dendritic subtrees. In conclusion, we have created a flexible linear fitting method to reduce non-linear biophysical models. To streamline the process of obtaining these reduced compartmental models, work is underway on a toolbox (https://github.com/WillemWybo/NEAT) that automates the impedance matrix calculation and fitting process.
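
The linear nature of the fit can be illustrated with a small, purely passive, steady-state sketch. It assumes that the reduced model's conductance matrix G (leak plus coupling conductances) should satisfy G Z = I for a target impedance matrix Z, which is linear in the unknown conductances. This is an illustration under those assumptions only: it is not the NEAT toolbox API, it ignores frequency dependence and ion channels, and all names are hypothetical.

```python
def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(M[i][c] * x[c] for c in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x

def conductance_matrix(g_leak, g_couple, edges):
    """Build G from leak conductances and coupling conductances on edges."""
    n = len(g_leak)
    G = [[0.0] * n for _ in range(n)]
    for i, g in enumerate(g_leak):
        G[i][i] += g
    for (i, k), gc in zip(edges, g_couple):
        G[i][i] += gc
        G[k][k] += gc
        G[i][k] -= gc
        G[k][i] -= gc
    return G

def impedance_matrix(G):
    """Steady-state impedance matrix Z = G^-1, computed column by column."""
    n = len(G)
    cols = [solve(G, [1.0 if r == j else 0.0 for r in range(n)]) for j in range(n)]
    return [[cols[j][i] for j in range(n)] for i in range(n)]

def fit_conductances(Z, edges):
    """Least-squares fit of leak and coupling conductances so that G Z = I."""
    n, m = len(Z), len(Z) + len(edges)
    rows, rhs = [], []
    for i in range(n):
        for j in range(n):
            row = [0.0] * m
            row[i] = Z[i][j]  # coefficient of the leak conductance g_i
            for e, (a, b) in enumerate(edges):
                if a == i:
                    row[n + e] = Z[i][j] - Z[b][j]
                elif b == i:
                    row[n + e] = Z[i][j] - Z[a][j]
            rows.append(row)
            rhs.append(1.0 if i == j else 0.0)
    # Normal equations (A^T A) x = A^T y of the linear least-squares problem.
    AtA = [[sum(r[s] * r[t] for r in rows) for t in range(m)] for s in range(m)]
    Aty = [sum(r[s] * y for r, y in zip(rows, rhs)) for s in range(m)]
    x = solve(AtA, Aty)
    return x[:n], x[n:]

# Three compartments in a chain; the target Z is generated from known values.
edges = [(0, 1), (1, 2)]
Z_target = impedance_matrix(conductance_matrix([1.0, 0.5, 2.0], [3.0, 0.7], edges))
print(fit_conductances(Z_target, edges))
```

Because the unknowns enter linearly, the error function is quadratic and has a single minimum, which is the property the abstract highlights. In practice the target impedance matrix would come from the full biophysical model evaluated at the chosen locations rather than from a known small model as in this toy check.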

a Reduction of branch of stellate cell with compartments at 4 locations. b Biophysical (left) and reduced (middle) impedance matrices and error (right) at two holding potentials (top–bottom). c Somatic conductances. d Somatic voltage. e Spike coincidence factor between both models (1: perfect coincidence, 0: no coincidence—4 ms window). F res. g Same as d, but for green resp. blue site

References

1. Branco T, Clark B, Hausser M. Dendritic discrimination of temporal input sequences in cortical neurons. Science Signaling 2010, Sept:1671–1675.

2. Carnevale NT, Hines ML. The NEURON book 2004.

3. Cichon J, Gan WB. Branch-specific dendritic Ca2+ spikes cause persistent synaptic plasticity. Nature 2015, 520(7546):180–185.

4. Goodman DFM, Brette R. The Brian simulator. Frontiers in neuroscience 2009, 3(2):192–7.

5. Häusser M, Mel B. Dendrites: bug or feature? Current Opinion in Neurobiology 2003, 13(3):372–383.

6. Kellems AR, Chaturantabut S, Sorensen DC, Cox SJ. Morphologically accurate reduced order modeling of spiking neurons. Journal of computational neuroscience 2010, 28(3):477–94.

7. Koch C, Poggio T. A simple algorithm for solving the cable equation in dendritic trees of arbitrary geometry. Journal of neuroscience methods 1985, 12(4):303–315.

8. Takahashi N, Oertner TG, Hegemann P, Larkum ME. Active cortical dendrites modulate perception. Science 2016, 354(6319):1587–90.

9. Wybo WA, Torben-Nielsen B, Nevian T, Gewaltig MO. Electrical Compartmentalization in Neurons. Cell Reports 2019, 26(7):1759–1773.e7.

10. Wybo WAM, Boccalini D, Torben-Nielsen B, Gewaltig MO. A Sparse Reformulation of the Green’s Function Formalism Allows Efficient Simulations of Morphological Neuron Models. Neural computation 2015, 27(12):2587–622.

11. Traub RD, Pais I, Bibbig A, et al. Transient depression of excitatory synapses on interneurons contributes to epileptiform bursts during gamma oscillations in the mouse hippocampal slice. Journal of neurophysiology 2005 Aug;94(2):1225–35.

O16 An exact firing rate model reveals the differential effects of chemical versus electrical synapses in spiking networks

Ernest Montbrió1, Alex Roxin2, Federico Devalle1, Bastian Pietras3, Andreas Daffertshofer3

1Universitat Pompeu Fabra, Department of Information and Communication Technologies, Barcelona, Spain; 2Centre de Recerca Matemàtica, Barcelona, Spain; 3Vrije Universiteit Amsterdam, Behavioral and Movement Sciences, Amsterdam, Netherlands

Correspondence: Alex Roxin (aroxin@crm.cat)

BMC Neuroscience 2019, 20(Suppl 1):O16

Chemical and electrical synapses shape the collective dynamics of neuronal networks. Numerous theoretical studies have investigated how each of these types of synapses separately contributes to the generation of neuronal oscillations, but their combined effect is less well understood. In part this is because traditional neuronal firing rate models cannot include electrical synapses.

Here we perform a comparative analysis of the dynamics of heterogeneous populations of integrate-and-fire neurons with chemical, electrical, and both chemical and electrical coupling. In the thermodynamic limit, we show that the population’s mean-field dynamics is exactly described by a system of two ordinary differential equations for the center and the width of the distribution of membrane potentials (or, equivalently, for the population-mean membrane potential and firing rate). These firing rate equations exactly describe, in a unified framework, the collective dynamics of the ensemble of spiking neurons, and reveal that both chemical and electrical coupling are mediated by the population firing rate. Moreover, while chemical coupling shifts the center of the distribution of membrane potentials, electrical coupling tends to reduce the width of this distribution, promoting the emergence of synchronization.
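
The abstract does not print the firing rate equations. As a hedged illustration, the sketch below integrates a two-variable system of the kind described, under the assumption of quadratic integrate-and-fire neurons with Lorentzian-distributed excitabilities (center `eta`, half-width `delta`), chemical coupling strength `j_chem` and electrical coupling strength `g_elec`. The specific form, parameter names and values are assumptions made for this example and should be checked against the authors' full publication.

```python
import math

def derivatives(r, v, eta, delta, j_chem, g_elec, tau=1.0):
    """Time derivatives of firing rate r and mean membrane potential v.

    Assumed form: electrical coupling enters as a -g_elec * r term in the
    rate equation (narrowing the voltage distribution), chemical coupling
    as a j_chem * tau * r term in the voltage equation (shifting its center).
    """
    dr = (delta / (math.pi * tau) + 2.0 * r * v - g_elec * r) / tau
    dv = (v * v + eta + j_chem * tau * r - (math.pi * tau * r) ** 2) / tau
    return dr, dv

def simulate(r0, v0, t_end, dt, **params):
    """Integrate the two equations with a classical fourth-order Runge-Kutta scheme."""
    r, v = r0, v0
    for _ in range(int(round(t_end / dt))):
        k1 = derivatives(r, v, **params)
        k2 = derivatives(r + 0.5 * dt * k1[0], v + 0.5 * dt * k1[1], **params)
        k3 = derivatives(r + 0.5 * dt * k2[0], v + 0.5 * dt * k2[1], **params)
        k4 = derivatives(r + dt * k3[0], v + dt * k3[1], **params)
        r += dt * (k1[0] + 2.0 * k2[0] + 2.0 * k3[0] + k4[0]) / 6.0
        v += dt * (k1[1] + 2.0 * k2[1] + 2.0 * k3[1] + k4[1]) / 6.0
    return r, v

# Relax to a low-activity state with and without electrical coupling.
for g in (0.0, 1.0):
    r_end, v_end = simulate(0.1, -2.0, 20.0, 0.001,
                            eta=-5.0, delta=1.0, j_chem=0.0, g_elec=g)
    print(f"g_elec={g}: r={r_end:.4f}, v={v_end:.4f}")
```

In this form both couplings act only through the population firing rate r, in line with the statement above; fixed points are obtained by setting both derivatives to zero, which is what makes the bifurcation analysis tractable.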

The firing rate equations are highly amenable to analysis, and allow us to obtain exact formulas for all the fixed points and their bifurcations. We find that the phase diagram for networks with instantaneous chemical synapses is characterized by a codimension-two cusp point, and by the presence of persistent states for strong excitatory coupling. In contrast, the phase diagram for electrically coupled networks is determined by a Takens-Bogdanov codimension-two point, which entails the presence of oscillations and greatly reduces the presence of persistent states. Oscillations arise either via a Saddle-Node-Invariant-Circle bifurcation, or through a supercritical Hopf bifurcation. Near the Hopf bifurcation the frequency of the emerging oscillations coincides with the most likely firing frequency of the network. Only the presence of chemical coupling makes it possible to shift the frequency of these oscillations (increasing it for excitation and decreasing it for inhibition). Finally, we show that the Takens-Bogdanov bifurcation scenario is generically present in networks with both chemical and electrical coupling.

Acknowledgement: We acknowledge support by the European Union’s Horizon 2020 research and innovation programme under the Marie Skłodowska Curie grant agreement No. 642563.

O17 Graph-filtered temporal dictionary learning for calcium imaging analysis

Gal Mishne1, Benjamin Scott2, Stephan Thiberge4, Nathan Cermak3, Jackie Schiller3, Carlos Brody4, David W. Tank4, Adam Charles4

1Yale University, Applied Math, New Haven, CT, United States of America; 2Boston University, Boston, United States of America; 3Technion, Haifa, Israel; 4Princeton University, Department of Neuroscience, Princeton, NJ, United States of America

Correspondence: Gal Mishne (gal.mishne@yale.edu)

BMC Neuroscience 2019, 20(Suppl 1):O17

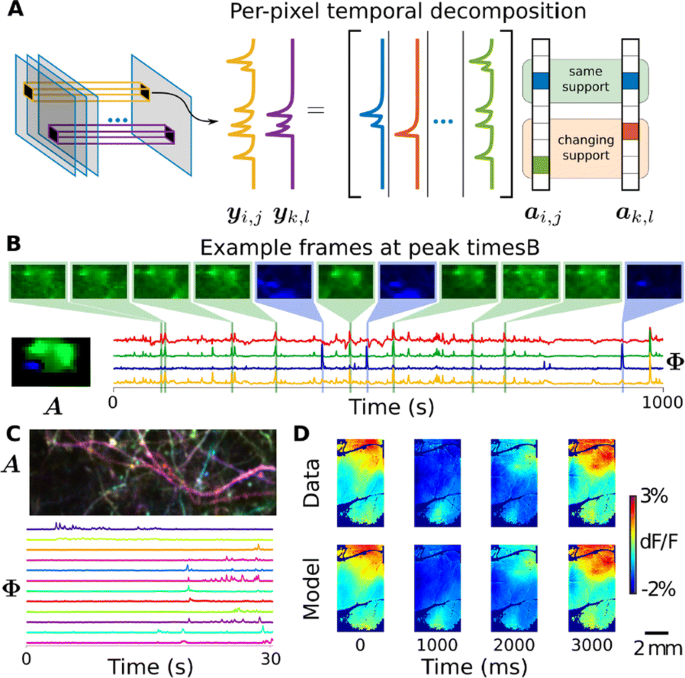

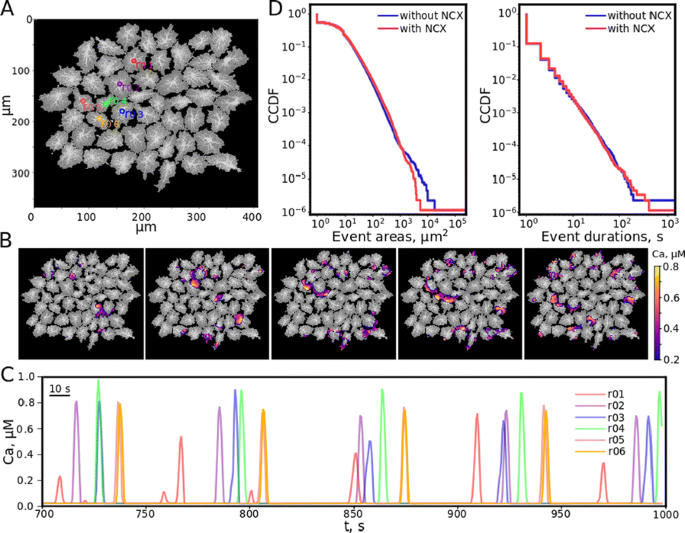

Optical calcium imaging is a versatile imaging modality that permits the recording of neural activity at many scales, including single dendrites and spines, deep neural populations using two-photon microscopy, and entire cortical surfaces using wide-field imaging. To utilize calcium imaging, the temporal fluorescence fluctuations of each component (e.g., spines, neurons or brain regions) must be extracted from the full video. Traditional segmentation methods used spatial information to extract regions of interest (ROIs), and then projected the data onto the ROIs to calculate the time-traces [1]. Current methods typically use a combination of a priori spatial and temporal statistics to isolate each fluorescing source in the data, along with the corresponding time-traces [2, 3]. Such methods often rely on strong spatial regularization and temporal priors that can bias time-trace estimation and that do not translate well across imaging scales.

We propose to instead model how the time-traces generate the data, using only weak spatial information to relate per-pixel generative models across a field-of-view. Our method, based on spatially-filtered Laplacian-scale mixture models [4, 5], introduces a novel non-local spatial smoothing and additional regularization to the dictionary learning framework, where the learned dictionary consists of the fluorescing components’ time-traces.

We demonstrate on synthetic and real calcium imaging data at different scales that our solution has advantages regarding initialization, implicitly inferring the number of neurons, and simultaneously detecting different neuronal types (Fig. 1). For population data, we compare our method to a current state-of-the-art algorithm, Suite2p, on the publicly available Neurofinder dataset (Fig. 1C). The lack of strong spatial contiguity constraints allows our model to isolate both disconnected portions of the same neuron and small components that would otherwise be overshadowed by larger components. The latter case is important, as such configurations can easily cause false transients, which can be scientifically misleading. On dendritic data our method isolates spines and dendritic firing modes (Fig. 1D). Finally, our method can partition widefield data [6] into a small number of components that capture the scientifically relevant neural activity (Fig. 1E-F).